One day, a wormhole appears in your garden, and you retrieve a book from it. The text in the book is complex and difficult to understand, resembling an alien language. How would you go about deciphering it? Would you start by analyzing whether these characters form a fixed set of symbols, much like our alphabet, or by observing the patterns in which they combine? Or perhaps you would consider enlisting the help of a large model, hoping it could assist you in understanding the book’s contents? So, can large models really learn “alien languages”?

Before attempting to learn an alien language, consider that large models have already been applied to decoding the communication of animals such as whales. Moreover, large models can rapidly pick up a wide range of programming languages. So, what characteristics would make an alien language easier for large models to decipher? A recent study published in Nature Communications reports that compositional language structure not only makes learning more efficient for large models but also facilitates language acquisition for humans.

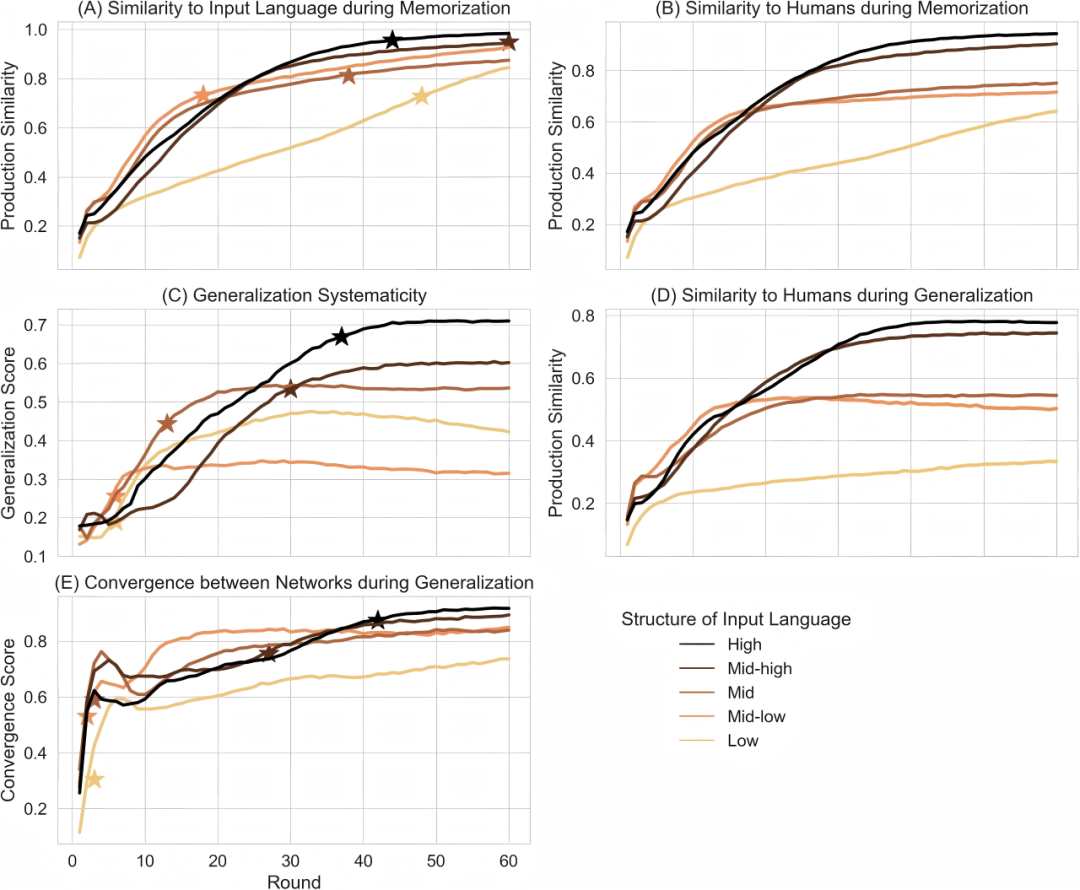

▷ Figure 1. Source: Galke, L., Ram, Y. & Raviv, L. Deep neural networks and humans both benefit from compositional language structure. Nat Commun 15, 10816 (2024). https://doi.org/10.1038/s41467-024-55158-1

What Is the Compositionality of Language?

Compositionality refers to the idea that the meaning of a complex expression is built from the meanings of its parts and the way they are combined. Consider two languages: In Language A, describing a “black horse” requires only combining the word for “black” with the word for “horse” to form the appropriate term, whereas in Language B the concepts of “horse,” “black,” and “black horse” are represented by three entirely distinct words. If more of Language A’s expressions are built by combination in this way than Language B’s, then Language A is said to possess higher compositionality.
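As a rough illustration, the sketch below contrasts the two kinds of language in a toy world of colors and animals. All word forms (`ka`, `lem`, `w00`, and so on) are invented for this example and do not come from the study, and Language B is simplified to cover only the color-animal pairs.

```python
from itertools import product

colors = ["black", "white", "brown"]
animals = ["horse", "dog", "cat", "bird"]

# Language A (compositional): one invented form per basic concept.
lexicon_a = {
    "black": "ka", "white": "mo", "brown": "tu",
    "horse": "lem", "dog": "sor", "cat": "nip", "bird": "gaf",
}


def describe_a(color, animal):
    """Build the label for a colored animal by concatenating its parts."""
    return lexicon_a[color] + lexicon_a[animal]


# Language B (holistic): every color-animal pair gets its own arbitrary word.
meanings = list(product(colors, animals))
lexicon_b = {pair: f"w{index:02d}" for index, pair in enumerate(meanings)}

print(len(meanings))   # distinct color-animal meanings to express
print(len(lexicon_a))  # forms a learner of Language A must memorize
print(len(lexicon_b))  # forms a learner of Language B must memorize
print(describe_a("black", "horse"))
```

With three colors and four animals, Language A covers all twelve meanings with seven memorized forms, while Language B needs twelve. The gap widens as the number of colors and animals grows.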

▷ Figure 2. For aliens, which term is more likely to lead them to associate the picture with the text: “zebra” or “斑马” (literally, “striped horse”)? Source: AlLes

For adults, learning a language with strong compositionality requires a higher level of logical reasoning. This quality enables learners to deduce a set of generative rules instead of having to memorize each word individually. We have all experienced this: when learning English, understanding word roots makes vocabulary acquisition much easier than memorizing words directly from a vocabulary list. A language with high compositionality allows learners, after being exposed to a limited set of elements, to use these rules to produce an infinite array of expressions. In fact, research indicates that modern languages typically feature a robust compositional structure—an evolutionary development that enhances the efficiency of both learning and using the language.

A similar concept exists in programming languages. In low-level assembly language, every operation on a variable requires its own corresponding instruction. In contrast, high-level languages such as Python allow multiple operations to be consolidated into a single function call, which can, for instance, perform thousands of calculations on a matrix in one go. Large-scale models have shown advantages in understanding and applying programming languages, especially high-level languages with strong compositionality. However, previous studies have suggested that these models did not necessarily benefit from languages with a strong organizational structure.

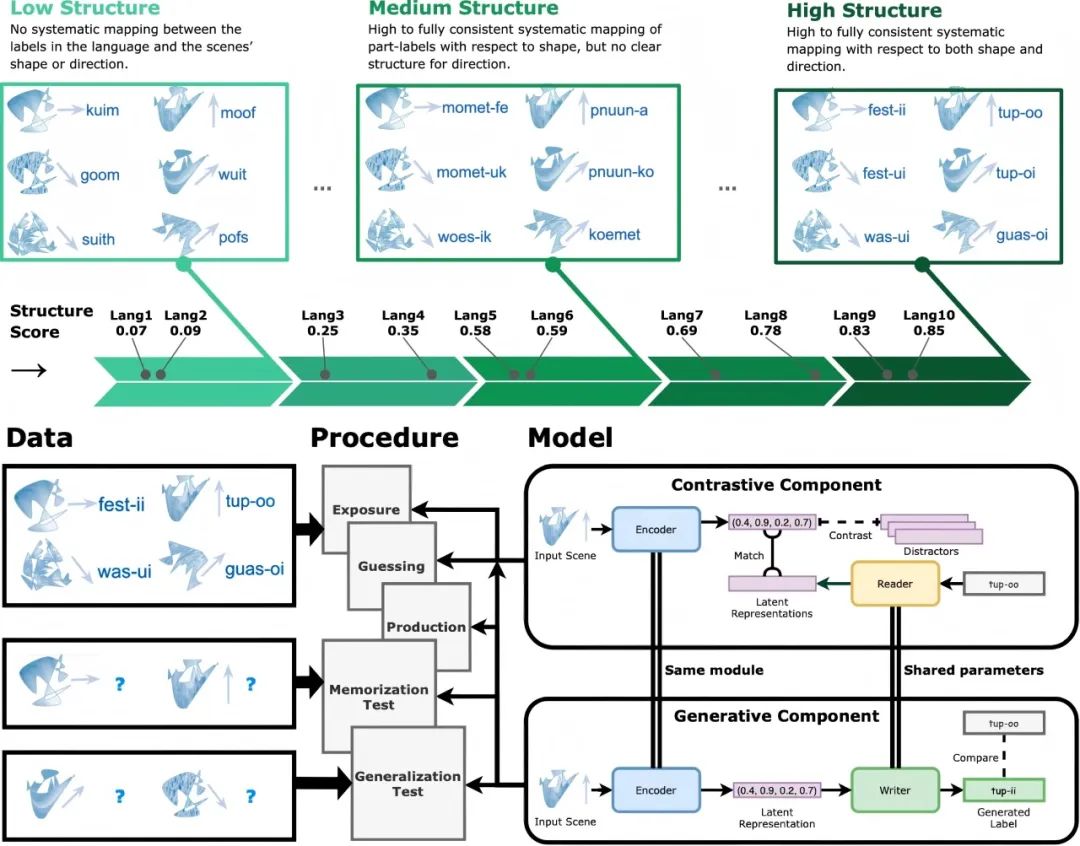

To address this, Galke et al. sought to answer the following question: When trained on more structured language inputs, do deep neural network models exhibit the same learning and generalization advantages as adult humans? The researchers employed GPT-3.5 as the pre-trained model and an RNN as the language model to be trained from scratch. They used artificial languages with varying degrees of compositionality as training texts to assess how well both human subjects and the models learned these laboratory-generated languages. The results revealed that the more compositional the structure of the training language, the better the learners generalized. This pattern was observed in humans as well as in both the pre-trained model and the networks trained from scratch (Figure 3).

▷ Figure 3. Overview of the experimental design. The researchers devised artificial languages with different levels of structure, divided into two categories: low-structure and high-structure languages. Low-structure languages lack systematic organization and compositionality, while high-structure languages exhibit both systematic organization and compositionality with respect to their shape and angular properties. The experimental procedure consisted of multiple rounds of training, each comprising an exposure phase, a guessing phase, and a generation phase. At the end of each round, memory tests and generalization tests were conducted to evaluate the models’ ability to reproduce previously seen items and to generate new items.

Highly Structured Languages Are Easier to Learn

First, the researchers explained why large-scale models might not be expected to favor highly compositional languages. In short, deep neural networks usually possess an enormous capacity, which means they can easily memorize every individual linguistic expression without needing to identify compositional patterns as a memory aid. However, this does not imply that compositionality is irrelevant to large-scale models. In languages with greater compositionality, individual semantic units are reused across various contexts, so each unit occurs more often in the training data. Consequently, these recurring semantic units and the contexts in which they appear are learned more effectively through repeated exposure during training.
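The frequency argument can be sketched with a simple count. This is only an illustration under assumed data: the four scenes and the forms `powd`, `a`, and `word0` to `word3` are hypothetical, while `muif` and `i` echo the example labels mentioned in the study's figures.

```python
from collections import Counter

# Hypothetical training set: four scenes (shape, direction), each seen once.
scenes = [("star", "up"), ("star", "right"), ("ring", "up"), ("ring", "right")]

# Compositional labels: a shape part plus a direction part.
shape_form = {"star": "muif", "ring": "powd"}
direction_form = {"up": "i", "right": "a"}

unit_counts = Counter()
for shape, direction in scenes:
    unit_counts[shape_form[shape]] += 1
    unit_counts[direction_form[direction]] += 1

# Holistic labels: one unrelated word per scene, so each form is seen once.
holistic_form = {scene: f"word{index}" for index, scene in enumerate(scenes)}
holistic_counts = Counter(holistic_form[scene] for scene in scenes)

print(unit_counts)
print(holistic_counts)
```

From the same four scenes, each compositional unit is observed twice, whereas each holistic word is observed only once. A learner therefore gets more evidence per unit from the compositional language.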

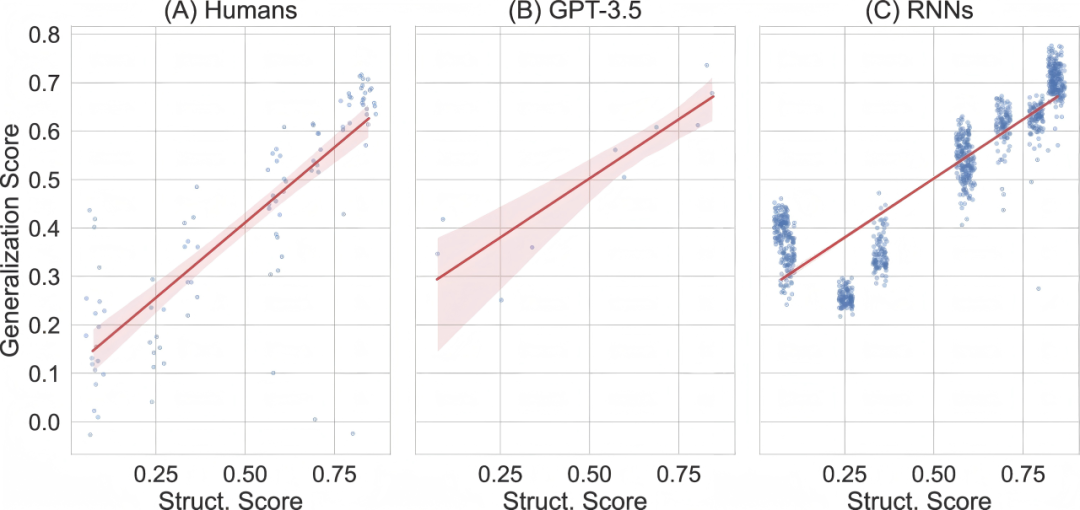

Let us return to the alien example. Suppose the alien book contains a reference table indicating that the meaning of “next” is “to the right” and that of “question” is “upwards.” Then, how would you express “up and to the right”? In a highly compositional language, one can deduce a pattern whereby combining “next” and “question” conveys the meaning “up and to the right,” whereas in a language with low compositionality such a pattern might be absent. This ability to apply learned knowledge or skills to new, unseen situations or data is known as “generalization.” When comparing languages with high compositionality to those with low compositionality, both human learners and large-scale models achieve significantly higher generalization scores with highly structured languages (see Figure 4).
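The alien example can be written as a small sketch that contrasts a learner who recombines known parts with one who only looks up memorized wholes. The function names and the hyphen used to join words are assumptions made for illustration, not part of the study.

```python
# Reference table from the example above: word -> meaning.
reference = {"next": "right", "question": "up"}
meaning_to_word = {meaning: word for word, meaning in reference.items()}


def compositional_label(meanings):
    """Label a combined meaning by joining the words of its parts.

    Returns None if any part has never been observed.
    """
    words = [meaning_to_word.get(meaning) for meaning in meanings]
    if any(word is None for word in words):
        return None
    return "-".join(words)


def holistic_label(meanings, memorized):
    """Look up the whole meaning in a memorized table, with no recombination."""
    return memorized.get(tuple(meanings))


# A memorizing learner has only ever seen the two single meanings.
memorized = {("right",): "next", ("up",): "question"}

print(compositional_label(["up", "right"]))        # question-next
print(holistic_label(["up", "right"], memorized))  # None
```

The compositional learner can label the unseen meaning “up and to the right,” while the memorizing learner has nothing to fall back on.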

▷ Figure 4. The figure shows the final generalization scores achieved by humans (A), GPT-3.5 (B), and RNN (C) across different input languages. The horizontal axis represents the structural score of the input language, and the vertical axis represents the generalization score. Each point corresponds to the overall generalization score of an input language, reflecting the extent to which the learner systematically generalizes new labels based on the learned labeling system. For instance, if a learner successfully recombines previously used components—such as combining “muif,” which represents shape, with “i,” which denotes direction, to form “muif-i”—the generalization score will be high. The shaded area around the regression line indicates the 95% confidence interval estimated via bootstrapping.

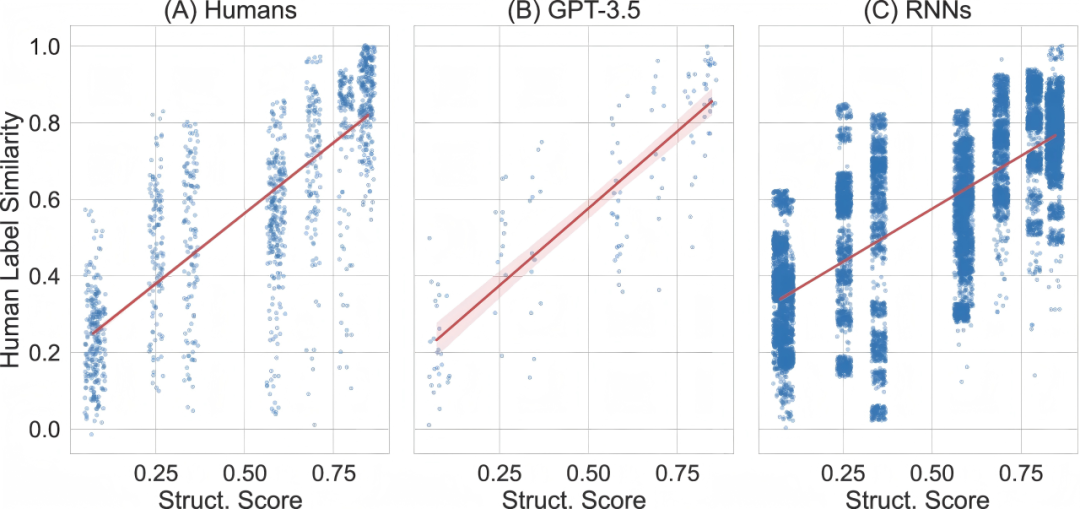

Furthermore, when trained on more structured languages (that is, languages with clear grammatical rules and syntactic hierarchies), GPT-3.5 begins to exhibit prediction patterns that closely resemble those of human subjects. Figure 5B illustrates the similarity between GPT-3.5’s predictions and those of human participants for the next word in the new language under the same conditions. Similarly, Figure 5A shows that as the structure of the training text improves, the similarity among human learners during the generalization process also increases.

▷ Figure 5. The figure displays the final similarity scores between the outputs produced by humans (A), GPT-3.5 (B), and RNN (C) during the generalization process compared to human production. The horizontal axis represents the structural score of the input language, while the vertical axis indicates the production similarity score (calculated as the length-normalized edit distance), which measures how closely the labels generated by the models match those produced by human participants.
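For readers curious about how a length-normalized edit distance works, here is a minimal sketch. Dividing by the length of the longer label and reporting similarity as one minus the normalized distance are assumptions made here; the study's exact formula may differ. The label `muif-a` is invented for the example.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


def normalized_edit_distance(a: str, b: str) -> float:
    """Edit distance divided by the longer length (assumed normalization)."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 0.0
    return edit_distance(a, b) / longest


def production_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1]; 1.0 means the two labels are identical."""
    return 1.0 - normalized_edit_distance(a, b)


print(production_similarity("muif-i", "muif-i"))
print(production_similarity("muif-i", "muif-a"))
```

Normalizing by length keeps long and short labels comparable: a single differing character costs more in a three-letter label than in a ten-letter one.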

In essence, when learning highly structured languages, both large-scale models and human learners tend to converge—each opting to exploit the inherent structure of the language—which in turn leads to more accurate predictions for subsequent word generation.

Moreover, when confronted with languages that exhibit a higher degree of structural organization, large-scale models not only reproduce and generalize the language more accurately but also learn at a faster pace (see Figures 6A and 6C). Their performance in terms of memory retention and generalization becomes more similar to that of humans as well (see Figures 6B and 6D).

▷ Figure 6. The figure demonstrates how more structured languages lead to improved and faster reproduction of the input language (A), better generalization to novel scenarios (C), higher consistency with human participants in memory (B) and generalization (D) processes, as well as greater convergence among networks (E).

Ultimately, the degree of language structure also affects the trajectory of generalization. In highly structured languages, rules are explicit and transparent, with each semantic unit consistently corresponding to its form in a regular pattern. When learning such a language, both humans and neural networks encounter little ambiguity, and all possible generalization paths converge to a consistent outcome. In contrast, less structured languages lack clear rules and compositionality, which results in multiple plausible generalizations. Different options may all seem reasonable, leading to linguistic diversity, such as the formation of dialects.

Therefore, highly structured languages facilitate better generalization and enhance consistency in language cognition both among different neural networks and between neural networks and humans. This finding supports the view that large language models are valuable for studying human cognitive mechanisms and provides additional evidence of the similarities between human and machine language learning.

Can Large Language Models Learn Alien Languages?

In terms of language learning, large language models have been shown to possess capabilities similar to those of humans. Considering that these models also boast superior memory, it is conceivable that one day, when faced with extraterrestrials, they might indeed help us learn an alien language. However, the real challenge lies in the fact that if an alien language lacks sufficient systematic structure, our understanding and usage of it could suffer from high error rates and uncertainty.

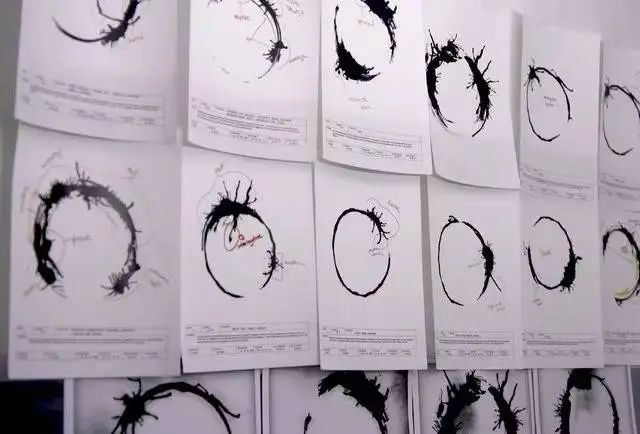

The alien language depicted in the science fiction film Arrival, with its highly non-linear and complex symbolic structure, appears to offer a mode of thinking that transcends our current cognitive abilities. Its uniqueness lies in its departure from traditional linear structures, allowing learners to grasp all the information in a sentence simultaneously and even predict future events. From the perspective of structured language learning, an alien language might exhibit even greater systematicity than terrestrial languages, providing learners with richer information and thereby enabling them to forecast what lies ahead.

▷ The alien script used by the extraterrestrials in Arrival. Source: Film Industry Network

From this viewpoint, input composed of highly structured language can enable large language models to generalize more effectively, thereby enhancing their ability to comprehend new contexts. Consequently, if an alien language were to possess a more precise and orderly structure, models trained on big data might gradually acquire and understand its grammatical rules—much like humans do—thereby eventually learning the alien language and even transforming their cognitive processes to comprehend the future, much like the protagonist in Arrival.

Returning from science fiction to reality, communication among agents powered by large language models has even given rise to new languages. However, these emergent languages often lack structure and are not easily understood by other agents [1]. This may be because agents that do not face “survival pressure” tend to generate disordered and hard-to-learn forms of communication when new languages emerge [2]. The evolutionary history of human language reflects this as well; in the absence of actual survival needs, languages often struggle to remain efficient and systematic [3].

Looking even further ahead, if one day humanity seeks to break down the language barriers between different countries and ethnic groups, we will also face the challenge of learning an entirely new language. At that point, if we intend to design a new language, its systematic structure must be taken fully into account. Only a language with clear, structured grammatical rules can be rapidly mastered by diverse groups worldwide and easily understood by various intelligent agents. Perhaps the book delivered to your garden by a wormhole is, in fact, an “Esperanto” dictionary sent by future humans across time.

Reference: