The 21st century is celebrated as the Information Age, a time when information has become an indispensable tool for survival and interaction with the world around us. But do we truly grasp the essence of "information"? It drives the era forward, yet its substantial impact on our lives and thought processes remains to be fully appreciated. Claude Shannon, a distinguished mathematician, laid the groundwork for information theory, offering a theoretical framework to discuss the quantification, storage, and transmission of information.

According to this theory, information serves as a "clue" that significantly reduces the uncertainty surrounding unknown events. If knowledge about certain events decreases the uncertainty of another, then that knowledge is considered information. Consider a newcomer at a company who initially knows little about it and feels uncertain about his colleagues. After sharing a few meals, he observes their behavior and gradually discerns their interests and personalities. This newfound information makes the colleagues less unfamiliar and notably diminishes the newcomer's sense of uncertainty. In our everyday lives and interpersonal interactions, information is ubiquitous, profoundly shaping our communication and cognitive processes.
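The idea that information is whatever reduces uncertainty can be made concrete with a small calculation. The sketch below is illustrative only: the joint probabilities are invented for the newcomer example (whether a colleague talks about sports at lunch, and whether they actually like sports), and the helper names `entropy` and `mutual_information` are hypothetical.

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of a probability distribution."""
    return -sum(p * log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(X;Y) in bits from a dict mapping (x, y) to its joint probability."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p
        py[y] = py.get(y, 0.0) + p
    return sum(p * log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)

# Made-up joint distribution: X = lunch observation, Y = colleague's interest.
joint = {
    ("talks_sports", "likes_sports"): 0.4,
    ("talks_sports", "dislikes_sports"): 0.1,
    ("quiet", "likes_sports"): 0.1,
    ("quiet", "dislikes_sports"): 0.4,
}

h_before = entropy([0.5, 0.5])        # uncertainty about Y before any lunch
gained = mutual_information(joint)    # uncertainty removed by observing X
h_after = h_before - gained           # remaining uncertainty H(Y|X)

print(f"before: {h_before:.3f} bits, gained: {gained:.3f} bits, after: {h_after:.3f} bits")
```

With these assumed numbers the observation removes roughly a quarter of a bit of uncertainty; a more revealing observation would remove more.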

From the perspective of information theory, the human brain is seen as an information-processing entity, with information acting as the neural system's fundamental currency. Neurons exchange information through electrical and neurochemical signals, constantly refining and integrating this data within the brain to shape our cognition and memory. Thus arises the question: Are electrical and chemical signals themselves information?

Neuroscientists have yet to agree on this matter [1]. Referring to information as the “base currency” in a rhetorical sense may not capture its true essence. Recently, information theory has embraced a new viewpoint: information is not a monolithic entity but consists of various forms. This approach, known as “information decomposition,” [2] seeks to break down information into three categories: unique information, redundant information, and synergistic information. This methodology offers a fresh perspective on the nature of information, aiding in a more thorough understanding of the brain's informational architecture and cognitive processes.

▷ Original paper: Luppi, Andrea I., et al. "Information decomposition and the informational architecture of the brain." Trends in Cognitive Sciences (2024).


1. Information Decomposition: Multifaceted Information

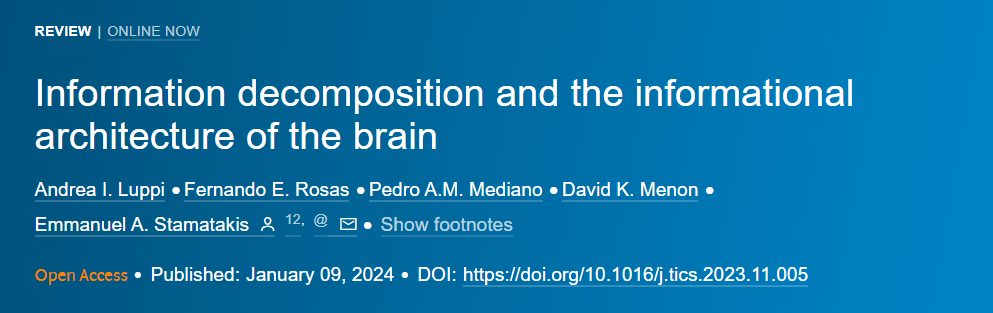

The three types of information (unique, redundant, and synergistic) each have distinct characteristics and play different roles in information processing. Consider visual information processing as an example. The human visual system comprises central and peripheral visual fields. The peripheral visual field captures a broad range of environmental information, including the general location and blurred details of objects. The information in the peripheral visual field of each eye is unique because closing one eye (for example, the left eye) results in the loss of that eye's peripheral information, leaving the brain to rely solely on the other eye (the right eye).

For instance, while driving, peripheral vision allows the driver to notice vehicles on the sides and behind through the rearview mirror. Should the driver close his left eye due to discomfort, he might not see a vehicle approaching quickly from the left rear side, potentially leading to an unforeseen accident. In contrast, the central visual field, which is the focal area of human vision, contains most of the detailed information we perceive. For a driver, the vehicle directly ahead in the same lane is visible within the central visual field of both eyes. Closing the left eye momentarily still allows the driver, using just the right eye, to observe the vehicle ahead. Such information is categorized as "redundant information," valued for its robustness since the same information is provided by multiple sources. This overrepresentation ensures that information is still accessible even if one source is compromised. However, the drawback of redundant information is its failure to fully leverage the brain's information-gathering capacity.

Synergistic information is the final category. Stereoscopic vision cannot be produced by a single eye. How, then, does the world appear three-dimensional to us? This effect relies on the collaboration between both eyes. Due to their different positions on the head, each eye views the same object from a slightly different perspective. The visual cortex receives two distinct two-dimensional images, and by analyzing the binocular disparity (the differences between these images), it calculates the object's distance, thereby creating a perception of depth and three-dimensionality. In driving scenarios, the cooperation of both eyes is crucial for accurately judging the distance to vehicles or obstacles ahead. Damage to either eye significantly compromises safety. Synergistic information's key feature is its efficiency, maximizing the interaction between different parts of the brain's neural system to achieve an effect where the whole is greater than the sum of its parts, thereby playing a vital role in managing complex tasks.

▷ Figure 1: The acorn and banana in the image symbolize unique information, the rectangle denotes redundant information, and the cube illustrates synergistic information, which requires the combined effort of both eyes to perceive depth. Figure source: Reference [2]

Information decomposition, as an approach to highly complex systems such as the human body, not only offers a more nuanced perspective on the structure of information but also finds applications in other domains, such as cellular automata, socio-economic data analysis, and artificial neural networks [3].
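The three kinds of information can be illustrated with toy two-source systems, loosely standing in for the left eye (`x1`), the right eye (`x2`), and what the brain needs to know (`y`). This is a minimal sketch under simplifying assumptions: all variables are single bits, all outcomes are equally likely, and the helpers `mi` and `profile` are hypothetical names. It reports how much each source tells us about `y` alone and how much the two tell us together; it does not implement a full decomposition.

```python
from collections import Counter
from itertools import product
from math import log2

def mi(pairs):
    """Mutual information I(A;B) in bits from a list of equally likely (a, b) outcomes."""
    n = len(pairs)
    pab = Counter(pairs)
    pa = Counter(a for a, _ in pairs)
    pb = Counter(b for _, b in pairs)
    return sum(c / n * log2(c * n / (pa[a] * pb[b])) for (a, b), c in pab.items())

def profile(rows):
    """Return (I(X1;Y), I(X2;Y), I(X1,X2;Y)) for equally likely (x1, x2, y) rows."""
    left = mi([(x1, y) for x1, _, y in rows])
    right = mi([(x2, y) for _, x2, y in rows])
    both = mi([((x1, x2), y) for x1, x2, y in rows])
    return round(left, 6), round(right, 6), round(both, 6)

bits = list(product([0, 1], repeat=2))

unique = [(a, b, a) for a, b in bits]            # y is visible to x1 only
redundant = [(a, a, a) for a in (0, 1)]          # both sources carry the same copy of y
synergistic = [(a, b, a ^ b) for a, b in bits]   # y = XOR, visible only to both together

print(profile(unique))       # (1.0, 0.0, 1.0)
print(profile(redundant))    # (1.0, 1.0, 1.0)
print(profile(synergistic))  # (0.0, 0.0, 1.0)
```

In the unique case, losing `x1` loses everything; in the redundant case, either source alone suffices; in the synergistic case, neither source alone says anything about `y`, yet together they determine it completely.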

2. How does the brain integrate information?

Integration is a key concept in neuroscience and cognitive science, presenting researchers with two distinct interpretations: integration as oneness and integration as cooperation. The oneness perspective views strong correlations or synchrony between activities in different brain regions, as observed in brain data analysis, as an indication of high integration. This is based on the idea that integrated elements operate as a single unit. The more synchronous and similar the activities between two elements are, the higher their level of integration is considered. Conversely, the cooperation perspective argues that when different elements complement each other, the system's information processing capacity benefits from their interactions, signifying integration. In terms of information decomposition, integration is seen as the counterpoint to unique information.

Oneness aligns with redundant information, while cooperation aligns with synergistic information. However, traditional neuroscience often relies on correlation to deduce the integration within the nervous system, a method that struggles to distinguish between oneness and cooperation accurately. High correlation typically suggests information redundancy, whereas low correlation can indicate either uniqueness or synergistic information. To differentiate redundancy from synergy, researchers have developed the synergy-redundancy index, which reflects the balance between these two in a system. If the system's total information output exceeds the sum of its individual parts, synergy is inferred. If the sum of the parts' contributions exceeds the system's total output, redundancy is present. While intuitive, this approach has limitations in situations where synergy and redundancy coexist, and it cannot precisely identify synergistic information.
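The limitation of the synergy-redundancy index can be seen in a small example. The sketch below assumes one common formulation of the index, the information the two sources carry jointly minus the sum of what each carries alone, so that positive values suggest synergy and negative values suggest redundancy. The helper names `mi` and `sr_index` and the toy systems are hypothetical.

```python
from collections import Counter
from itertools import product
from math import log2

def mi(pairs):
    """Mutual information I(A;B) in bits from a list of equally likely (a, b) outcomes."""
    n = len(pairs)
    pab = Counter(pairs)
    pa = Counter(a for a, _ in pairs)
    pb = Counter(b for _, b in pairs)
    return sum(c / n * log2(c * n / (pa[a] * pb[b])) for (a, b), c in pab.items())

def sr_index(rows):
    """Assumed index: I(X1,X2;Y) - I(X1;Y) - I(X2;Y) for equally likely (x1, x2, y) rows."""
    whole = mi([((x1, x2), y) for x1, x2, y in rows])
    parts = mi([(x1, y) for x1, _, y in rows]) + mi([(x2, y) for _, x2, y in rows])
    return round(whole - parts, 6)

xor_system = [(a, b, a ^ b) for a, b in product([0, 1], repeat=2)]
copy_system = [(a, a, a) for a in (0, 1)]

# Mixed system: both sources share bit a (redundant by construction),
# while the XOR of b and c is available only from both together (synergistic).
mixed_system = [((a, b), (a, c), (a, b ^ c))
                for a, b, c in product([0, 1], repeat=3)]

print(sr_index(xor_system))    # 1.0  -> synergy inferred
print(sr_index(copy_system))   # -1.0 -> redundancy inferred
print(sr_index(mixed_system))  # 0.0  -> the shared bit and the XOR bit cancel out
```

The mixed system is built to contain both a shared bit and an XOR bit, yet the index reads zero, which is the coexistence problem described above: a single net number cannot report the two quantities separately.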

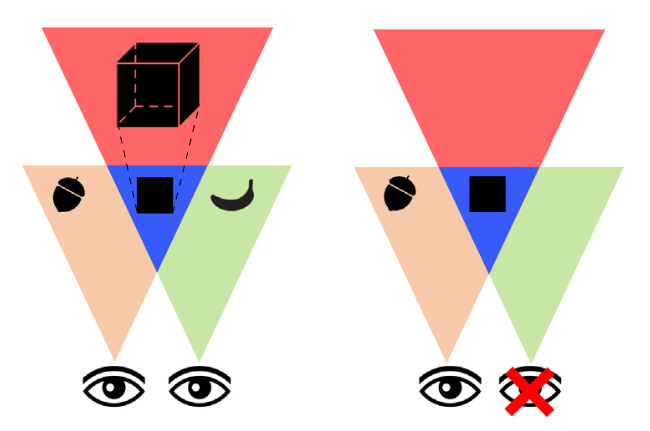

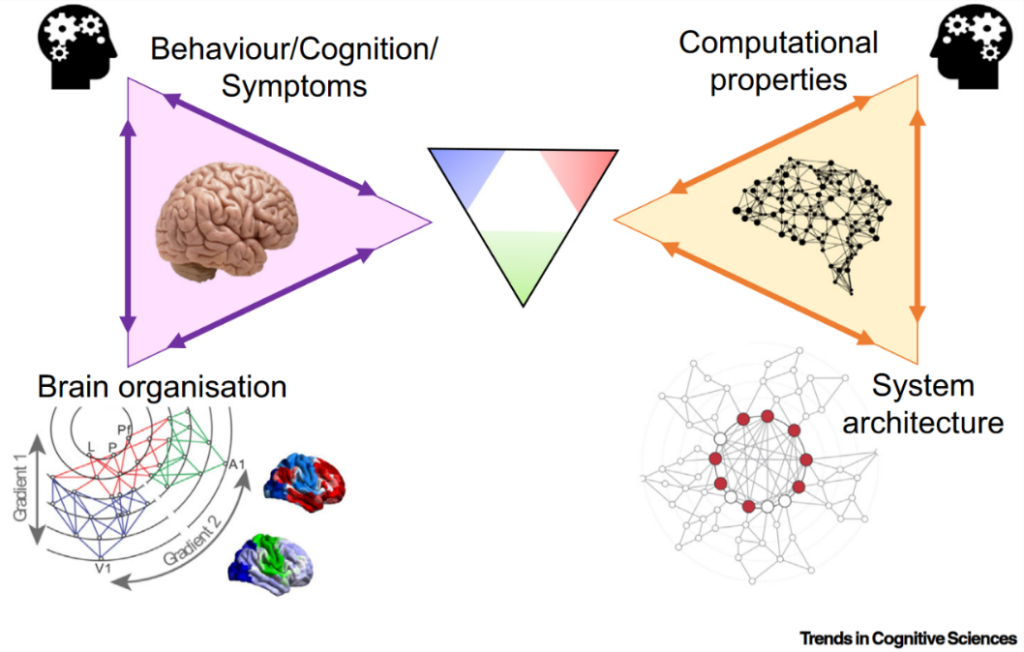

Unlike correlation-based methods or the synergy-redundancy index, information decomposition provides a more accurate analysis of information processing: it can be applied to the system's transfer entropy (TE), separating the information transferred into unique, redundant, and synergistic components. TE itself is directional. For instance, if TE is higher from time series X to Y than from Y to X, X is deemed to have a significant "influence" on Y. Consider epilepsy, which is characterized by disordered functional connectivity in the brain. Traditional interpretations view the highly synchronous signals seen in patients as indicative of oneness, which suggests that redundancy across different brain regions could contribute to epileptic seizures. However, when analyzing EEG data from epilepsy patients using information decomposition, researchers discovered increases in both redundant and synergistic information transfer from subcortical to cortical regions during seizures. Furthermore, an analysis of deep electrode recordings revealed that an increase in unique information transfer from specific subcortical areas to cortical regions might be a primary trigger for cortical oscillations, offering clearer evidence for identifying seizure onset zones [4].

▷ Figure 2: Information decomposition offers a unified framework for cognitive science, where each bi-directional arrow crossing the central triangular area represents opposing concepts in cognitive science and neuroscience. One end of the arrow corresponds to a category within the information decomposition framework, and the other end differentiates between two types of information, such as integration and disintegration, with the latter implying unique information and the former encompassing both redundant and synergistic information. Localization refers to instances where an information source solely contains unique information, making it exclusively available from that source. In contrast, sources with only redundant information disseminate their information across multiple locations. Differentiation occurs when system parts exhibit distinct behaviors or do not demonstrate oneness, indicating they might be independent or complementary. Figure source: Reference [2]
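The directional comparison of transfer entropy mentioned in this section can be sketched on synthetic data. The example below is an illustration under stated assumptions, not the method used in the cited studies: it uses binary time series, a history length of one step, and a simple plug-in (count-based) estimator, which is known to be slightly biased upward on finite data. The function name `transfer_entropy` and the simulated signals are hypothetical, and the sketch computes TE only, without decomposing it into unique, redundant, and synergistic parts.

```python
import random
from collections import Counter
from math import log2

def transfer_entropy(source, target):
    """Plug-in estimate of TE(source -> target) in bits with history length 1.

    TE = I(target[t+1]; source[t] | target[t]).
    """
    triples = list(zip(target[1:], target[:-1], source[:-1]))  # (future, past, src)
    n = len(triples)
    c_fps = Counter(triples)
    c_ps = Counter((p, s) for _, p, s in triples)
    c_fp = Counter((f, p) for f, p, _ in triples)
    c_p = Counter(p for _, p, _ in triples)
    te = 0.0
    for (f, p, s), c in c_fps.items():
        # ratio of p(future | past, src) to p(future | past)
        te += c / n * log2((c / c_ps[(p, s)]) / (c_fp[(f, p)] / c_p[p]))
    return te

rng = random.Random(0)
x = [rng.randint(0, 1) for _ in range(5000)]
# y copies the previous value of x 90% of the time, so x drives y but not vice versa.
y = [0] + [xt if rng.random() < 0.9 else 1 - xt for xt in x[:-1]]

te_xy = transfer_entropy(x, y)
te_yx = transfer_entropy(y, x)
print(f"TE(X->Y) = {te_xy:.3f} bits, TE(Y->X) = {te_yx:.3f} bits")
```

Because `y` is constructed to follow `x` with a one-step delay, the estimate from X to Y comes out much larger than the estimate from Y to X, which stays close to zero.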

3. How does the brain balance redundancy and synergy?

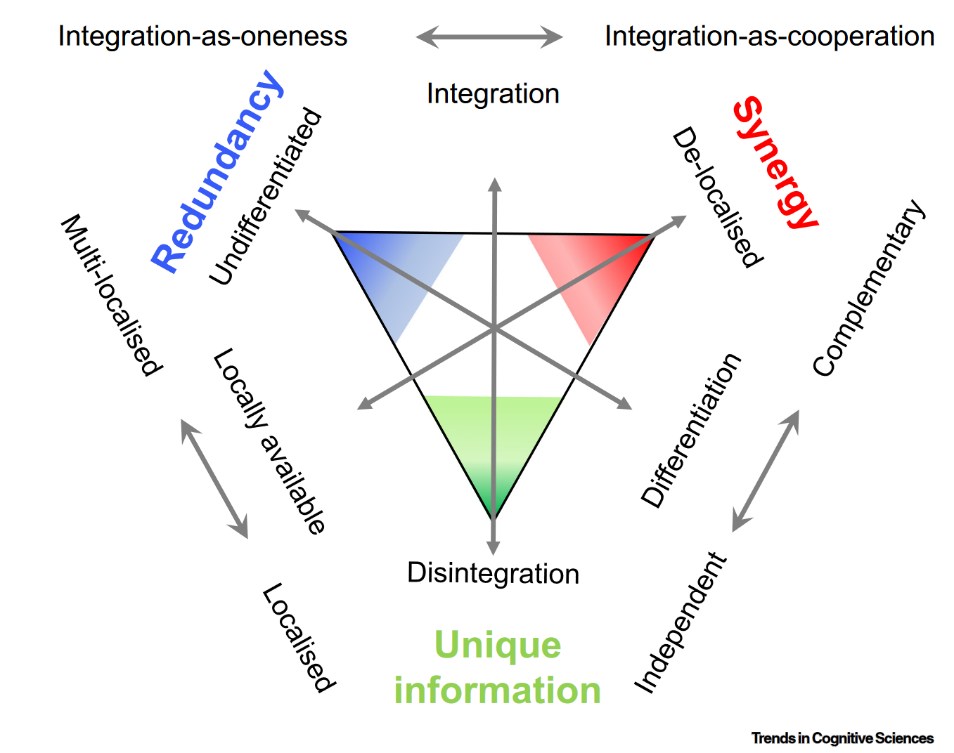

Redundancy and synergy, significant modes of interaction within the brain, have been distinguished by extensive research. A meta-analysis utilizing the NeuroSynth database, which includes over 15,000 imaging studies, revealed that redundant information is crucial for sensory-motor processing. As the brain's input-output mechanism, stable sensory-motor processing is essential for survival, with redundant interactions providing the necessary robustness. Conversely, synergistic information serves as a "global workspace" in the brain, crucial for executing higher cognitive functions. Areas of the brain exhibiting high synergy demonstrate increased aerobic glycolysis rates and a broader range of neurotransmitter receptor expressions, supporting flexible and rapid energy supply, synapse formation, and neural regulation [5]. At the macroscopic scale, human resting-state fMRI data indicate that synergy is more prevalent overall [5], although specific brain regions vary. The frontal and parietal association cortices, integral for integrating multimodal information, primarily operate through synergy. The frontal association cortex is involved in long-term planning and decision-making, while the parietal association cortex focuses on spatial localization, hand-eye coordination, and other tasks. In contrast, redundancy is more common in primary cortical areas that process unimodal information, such as the visual, somatomotor, and auditory cortices.

Compared to other primates, humans possess a more developed association cortex, suggesting a greater integration of information through cooperation and synergy, underscoring our cognitive advantages. Microscale research corroborates these macroscopic findings. Electrophysiological recordings in the prefrontal cortex reveal complex and flexible neuronal responses to stimuli and task changes, highlighting the importance of neuronal interactions. However, in the visual cortex, especially in areas V4 and V1, neuronal interactions contribute less to activity patterns [6, 7]. Artificial neural network research further supports these observations, showing that redundancy is predominant in early network stages. As learning progresses, neurons specialize, contributing more unique information. When networks learn multiple tasks, requiring flexibility to integrate different information sources, synergy strengthens.

Damage to highly synergistic neurons significantly impairs network performance. Conversely, training with randomly deactivated neurons enhances redundancy and robustness, improving resistance to damage [8]. In summary, while redundancy offers robustness and underpins sensory-motor functions in humans and many primates, it does not maximize the neural system's information-processing capacity. Synergy, associated with higher-order information processing, is more efficient and flexible, playing a crucial role in advancing human cognitive capabilities. Damage to parts of this cooperative network is likely to impair higher cognitive functions.

▷ Figure 3: (A) The image shows brain areas where redundancy (blue) and synergy (red) predominate. Blue areas, related to primary sensory-motor processing, exhibit a modular network organization with structured connections for single modality processing, like visual information in the visual cortex. Red areas, responsible for complex cognitive processing, are associated with synaptic density, dendritic spine-related genes, and enhanced plasticity. (B) The evolutionary trajectory of synergy. While redundant information remains relatively stable across species, the human brain shows more developed synergistic information, likely due to expanded cortical areas, compared to other primates. Figure source: Reference [2]

4. A New Blueprint for Artificial Intelligence Design

The framework of information decomposition not only provides a comprehensive lens for examining brain information processing but also sheds light on human evolutionary advantages. Moreover, it paves the way for researchers to design AI systems that more closely mimic human intelligence. Artificial neural networks have shown impressive capabilities across various domains. A pivotal question emerges: Do these systems rely on synergy, akin to the human brain? Recent advances in artificial intelligence have largely been driven by increases in model scale. Researchers note that as AI models grow in scale and their ability to handle multitasking improves, they display more synergistic behaviors, hinting at AI systems becoming more human-like. However, the inherent fragility of synergistic actions introduces risks for AI, suggesting that future designs of AI systems should differentiate between types of information, and evolve the information decomposition framework into a universal tool for deciphering complex systems. This approach could also illuminate the inner workings of AI models, often considered "black boxes." Conversely, artificial intelligence offers a fertile ground for testing theories of information. Observations indicate that synergy intensifies when confronting complex challenges. If AI systems were engineered to favor synergistic actions, perhaps through evolutionary algorithms, might they navigate complex tasks with greater efficacy? Moreover, an AI system designed solely for synergistic actions would provide a unique lens through which to examine the benefits and limitations of synergy—a scenario beyond the reach of any biological system.

▷ Figure 4: Envisioning the information decomposition framework as a Rosetta Stone bridging biology and artificial intelligence. Just as the Rosetta Stone, inscribed with Greek, Ancient Egyptian, and other scripts, was vital for decoding Ancient Egyptian history, information processing and decomposition elucidate the connections between brain structure, functional organization, and cognitive and behavioral outcomes. Similarly, in AI systems, this framework can clarify the links between system architecture, computational abilities, and performance outcomes. Whether in the biological brain or an AI system, information processing and decomposition, transcending the medium, can serve as a universal language. Figure source: Reference [2]


5. Conclusion

The rapid advancements in artificial intelligence technology often astonish us in our everyday lives. However, the complexity of the human brain vastly exceeds that of current AI systems, and the framework of information decomposition brings us one step closer to deciphering the brain's enigmas. What techniques will we uncover in the future to further decompose information? How can we leverage information decomposition to develop more sophisticated AI systems? Could a deeper insight into the brain's information architecture enable us to address the myriad mental health issues affecting society? Perhaps, with enough understanding of the brain's fundamental mechanisms, the creation of an artificial brain might transition from fantasy to reality.