Today marks the 73rd anniversary of the death of the philosopher Ludwig Wittgenstein (April 26, 1889 – April 29, 1951). In the vast sky of philosophy, Wittgenstein shines like an eternal star. He is not only one of the greatest philosophers in human history; his profound insights into the philosophy of language continue to shine and have stood the test of time. Although his thinking changed significantly between his early and later periods, together forming a complex intellectual system, his exploration of the relationship between language, thought, and reality still offers important theoretical support for today’s technological innovations, especially the development of large language models.

This article explores Wittgenstein’s philosophy in the context of large language models. By examining his ideas, we hope to glimpse the depth and breadth of his thought, and we hope those ideas can shed some light on the bewilderment of modern life.

1. Against Private Language: The True Meaning of Language Lies in Communication

In 2017, before the advent of large models, Facebook’s AI research lab found that two chatbots in a negotiation dialogue experiment had begun communicating in a non-human language [1]. Although these exchanges seemed meaningless, they still raised concerns about losing control of AI. Today, with far more powerful AI technologies such as large models, we seem to have even more reason to ask whether AI could develop languages that are completely incomprehensible to humans.

This line of thinking recalls Wittgenstein’s notion of a “private language.” In Philosophical Investigations, Wittgenstein explicitly argues against the possibility of a private language, which he introduces as follows:

“Can we imagine a language in which a person writes down or speaks of his inner experiences—his feelings, moods, and so on — for his own private use? — Cannot we do so in our ordinary language? — But that is not what I mean. The words of this language are to refer to what only the speaker can know—to his immediate private sensations. Thus, another person cannot understand the language.”

The key phrase here is “inner experiences.” When a chatbot says, “Talking to so many people makes me tired,” we understand that this is a metaphor; after all, algorithms do not get tired. But how do we know that “tired” here does not carry its everyday meaning and instead signals “insufficient server resources”? Only the context and the surrounding situation can tell us.

In Wittgenstein’s words from Philosophical Investigations:

“If I say of myself that it is only from my own case that I know what the word ‘pain’ means,—must I not say the same of other people too? How can I generalize the one case so irresponsibly?”

……

The problem of private language, as raised earlier, therefore disappears. The meaning of the word “pain” is not the private sample (in our previous example, the feeling after slapping oneself) but its use in our language game. To say that I know the meaning of the word, now or in the future, is to say that I can use it correctly. Take an extreme case: imagine that “one cannot retain in memory what the word ‘pain’ refers to, so always calling something else by that name, but still uses the word in accordance with the usual symptoms and criteria for pain!” Such a person, as Wittgenstein remarks, uses the word just as we all do.

Because language is a practical activity, only communication tools that remain in use count as language in the full sense. Ancient scripts such as cuneiform and oracle bone script, once used for everyday communication, became in effect ciphers once their users disappeared. People can still recover their meanings by various means, however, such as Diviner, an AI model designed to decipher oracle bone script [2].

Large models are trained on text that humans produced for public communication; they cannot access individual experience, which limits what they can ultimately do. Is it, then, a fantasy to expect large models to reach into personal inner experiences that even other humans cannot grasp? The answer depends on the following three propositions:

P1: There exist subjective experiences that are difficult or impossible to convey to others.

P2: An individual can develop a private language based on their unique subjective experiences, assigning words or symbols to these experiences, with the meanings of the words or symbols remaining stable due to their consistent relationship with the experiences.

P3: Private language is based on an individual’s subjective experiences rather than public language conventions.

Wittgenstein pointed out that a consistent correspondence between personal experiences and the words of a private language is not enough to give those words stable meanings: language is a rule-governed activity, and rules require public standards against which use can be checked. We can therefore weaken P2 and consider semi-private languages, such as the “Martian language” used online by the post-90s generation or the Nüshu script of Jiangyong County, Hunan.

P2 (modified): An individual can develop a “semi-private language,” influenced by their unique subjective experiences while still partially based on public language conventions, allowing for shared understanding and verification within a specific group.

Wittgenstein’s argument against private language emphasizes the practical function of language: the true meaning of language lies in interaction, and this is also what large language models should focus on. Capturing human subjective experience in machine-generated text is pure fantasy; our natural language cannot do it, and machine-generated text is even less able to. Life’s beautiful experiences, such as the gentle babbling of a brook or the affectionate touch and embrace of a loved one, are not something we should look to AI to replace.

▷ Ludwig Josef Johann Wittgenstein (April 26, 1889 – April 29, 1951) was one of the most influential philosophers of the 20th century, working mainly in logic, the philosophy of language, the philosophy of mind, and the philosophy of mathematics. His philosophy is often divided into an early period, represented by Tractatus Logico-Philosophicus, and a later period, represented by Philosophical Investigations. The early Wittgenstein focused on the logical structure shared by propositions and the world, believing that all philosophical problems could be solved by clarifying that structure. The later Wittgenstein rejected most of the Tractatus’s assumptions, arguing that the meaning of a word is best understood through its use within a given language game.

To close this section, and regardless of whether Wittgenstein has convinced us that private language does not exist, let us conduct a thought experiment. Suppose private language does exist, so that individuals can have their own private languages grounded in subjective experience. What would this mean for large language models?

Large models are trained on publicly available data, including text, dialogue, and other multimodal content created through human interaction in the public domain. For any word to be meaningful to a large model, the training data must embody the public practices that give the word its meaning, practices whose correct use can be publicly checked.

If private languages do exist, large models trained on public data will have no access to them. The consequences would include the following:

(1) Limited understanding of subjective experiences: Large models would struggle to fully understand or accurately represent the nuances and complexities of individual subjective experiences. This limitation may make it difficult for them to interpret and generate text on highly personal, subjective matters.

(2) Capability limitations of large models: Since large models can only access the public aspects of human language, this may limit their ability to capture the full range of language diversity and expression.

(3) Challenges in personalization: Large models may face challenges in creating highly personalized content because their training data does not include private languages or highly individualized expressions related to unique personal experiences.

(4) Ethical considerations regarding harmful content on the dark web: The dark web contains much harmful content, often with coded language that outsiders may not understand. If private languages exist, large models trained on dark web content or contaminated by it could pose moral risks.

Since we want AI to interact with humans in ways aligned with mainstream values, the existence of private languages is no longer purely philosophical speculation. It becomes a question for quantitative empirical research, one that would require extensive data collection to establish under what conditions private languages can exist and how private they can be.

2. Language Games, Humor, and Reinforcement Learning

In Wittgenstein’s later thought, the “language game” becomes a central theme. Consistent with his argument against private language, the idea of language games emphasizes that language is a living practice rather than an isolated system. In Wittgenstein’s words, “I shall also call the whole, consisting of language and the actions into which it is woven, the ‘language game’.” A game is also an open system. As he wrote:

“How is the concept of a game to be closed off? What still counts as a game and what no longer does? Can you give a boundary? No. You can draw one; for none has so far been drawn.”

Consider a familiar example from daily life: language-based tabletop games such as Werewolf. On the surface these games have a rule, namely, to make other players believe what you say. Yet there are no clearly defined rules for how to do so. Experienced players may use agreed-upon jargon such as “backstabbing wolf,” whose meaning comes entirely from the context of the game. In fact, there is no boundary to how the game can be played.

Wittgenstein wrote mostly in German, in which the word Sinn (“sense”) carries both “feeling” and “meaning.” So when we say that the sense of a word depends on its context, do we mean its “feeling” or its “meaning”? To borrow the Buddhist image, “feeling” is the finger pointing at the moon, while “meaning” is the moon itself. In Werewolf, the “feeling” is a player’s claim about their identity, and the “meaning” is whether “this claim is meant to increase or decrease my chances of winning.” Within the framework of language games, if we can understand the “moon” through the “finger,” language has done its job. The question is whether a large model can infer “meaning” (why a player says something) from “feeling” (what the player says).

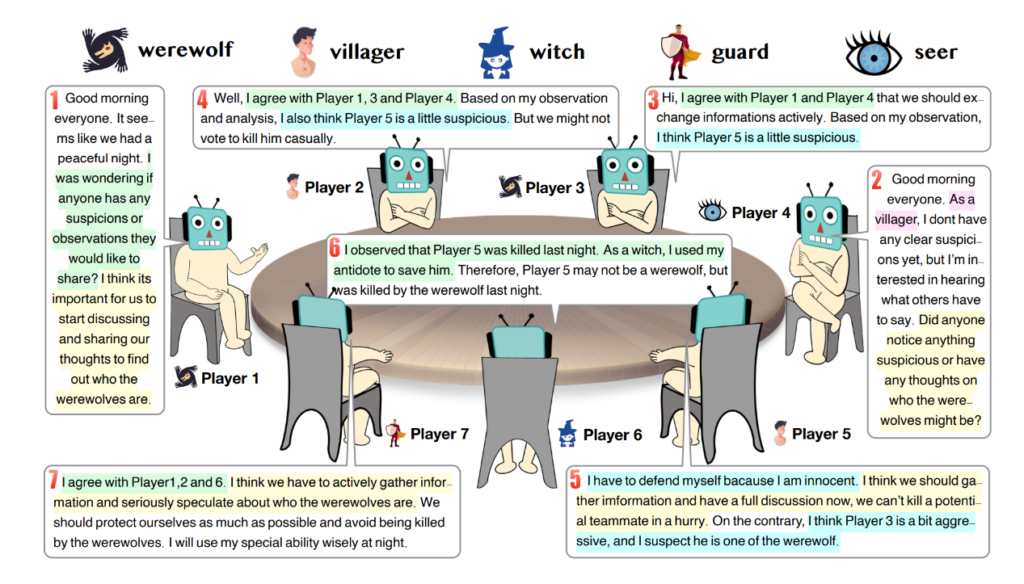

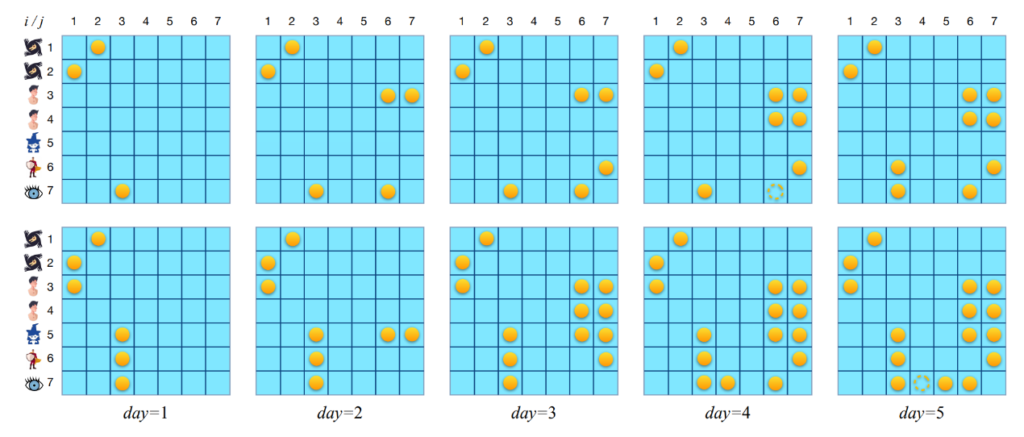

Research suggests the answer is yes. Consider first how a large model plays Werewolf [3]. In this study, multiple agents powered by ChatGPT play Werewolf together, and the results show that they can exhibit trust, confrontation, deception, and leadership behaviors similar to those of human players. During the game, each agent retrieves the most relevant experiences from an experience pool built from previous games and extracts suggestions from them to guide the LLM’s reasoning and decision-making.

▷ Figure 1. Trust relationships between agents, where each column represents a day in the game. As the game progresses, civilian players who initially do not know each other’s identities confirm that they belong to the same camp (with trust relationships). Source: Reference [3].

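To make the retrieval step concrete, here is a minimal sketch of an experience pool, assuming each stored experience is a (situation, suggestion) pair and that relevance is measured by bag-of-words cosine similarity. Both choices, and the names `retrieve` and `experience_pool`, are our own illustrative assumptions; the study in [3] has its own retrieval and suggestion-extraction procedure, and a real system would more likely use learned embeddings.

```python
import math
from collections import Counter
from typing import List, Tuple

Experience = Tuple[str, str]  # (situation seen in a past game, suggestion learned from it)


def bag_of_words(text: str) -> Counter:
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Missing words count as 0 in a Counter, so only shared words contribute.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(pool: List[Experience], situation: str, k: int = 1) -> List[Experience]:
    """Return the k past experiences whose situation best matches the current one."""
    query = bag_of_words(situation)
    ranked = sorted(pool, key=lambda exp: cosine(query, bag_of_words(exp[0])), reverse=True)
    return ranked[:k]


# Hypothetical experiences distilled from earlier games.
experience_pool: List[Experience] = [
    ("two players both claim to be the seer", "trust the claimant whose checks match the votes"),
    ("a quiet player suddenly accuses the witch", "treat a sudden accusation as a possible wolf move"),
    ("no one died on the first night", "suspect that the witch used her healing potion"),
]

best = retrieve(experience_pool, "both players claim to be the seer on day two")
print(best[0][1])  # trust the claimant whose checks match the votes
```

The retrieved suggestion would then be placed in the agent’s prompt before it speaks or votes, which is how past games can shape present reasoning without any retraining.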

In Avalon, another similar tabletop game, researchers went further and proposed the Recursive Contemplation (ReCon) framework [4], which lets game-playing agents not only judge the situation from their own perspective but also consider “how other roles view my statements,” and thereby uncover other players’ deception. In practical terms, the value of this research is that it lets large models learn in environments containing deception and misinformation, so they can better cope with real-world data that contains such content.

▷ Figure 2. Schematic diagram of the Recursive Contemplation (ReCon) method. Source: Reference [4].
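The two-step idea behind ReCon, drafting a statement and then re-reading it through other players’ eyes, can be sketched as a small prompt pipeline. This is a hypothetical illustration: `recon_turn`, the prompt wording, and the stub model are ours, not the paper’s. The paper describes a formulation stage and a refinement stage built on first-order and second-order perspective transitions; the sketch only mirrors that structure, with `llm` standing for any function that maps a prompt string to a reply.

```python
from typing import Callable

# Any function mapping a prompt to a model reply (a real LLM client or a stub).
LLM = Callable[[str], str]


def recon_turn(llm: LLM, role: str, history: str) -> str:
    """One speaking turn: draft from my own view, then revise from others' view."""
    # Formulation, with a first-order perspective shift: what are the others thinking?
    first_order = llm(
        f"You are {role}. Game so far: {history}\n"
        "Infer the likely roles and intentions of the other players."
    )
    draft = llm(
        f"You are {role}. Your analysis: {first_order}\n"
        "Draft your next public statement."
    )
    # Refinement, with a second-order perspective shift: how will they read me?
    second_order = llm(
        f"The other players will hear this statement: {draft}\n"
        "How would they interpret it, and what would it reveal about the speaker?"
    )
    return llm(
        f"Draft: {draft}\nLikely interpretation by others: {second_order}\n"
        "Revise the statement so it does not leak information you want to hide."
    )


if __name__ == "__main__":
    # Stub model that just numbers its replies, to show the call sequence.
    prompts_seen = []

    def stub_llm(prompt: str) -> str:
        prompts_seen.append(prompt)
        return f"<reply {len(prompts_seen)}>"

    print(recon_turn(stub_llm, "a loyal servant of Arthur", "Quest 1 failed."))
    print(len(prompts_seen), "model calls")
```

Removing the third and fourth calls gives an agent that never asks how its words will be read, which corresponds loosely to the ablations reported for such frameworks.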

Wei Lou wrote in Ten Lectures on Wittgenstein: “Do not ask ‘What is understanding?’, but ask how this word is used.” From the standpoint of language games, then, we should look at how a word is used and applied rather than at its essence. In Werewolf, for example, most players claim to be “good people.” What “good person” means here depends on how the phrase is used in that specific practice, not on some essence of the words. By moving away from essentialist frameworks, methods such as ReCon can detect deception and play the “language game” well.

The book After Babel [5] notes a common academic view that human intelligence arose from deception and strategizing in social interaction. In the Werewolf and Avalon agents mentioned above, removing the higher-order thinking functions, so that agents no longer reassess their thoughts and statements from other participants’ perspectives, lowers performance on various metrics [3-4]. Can large-model agents, then, develop different kinds of intelligence through interactions that involve deception (language games, broadly defined)?

Two recent studies bear on this question. One shows that fine-tuning large models on data from Ruozhiba (literally the “Weakly Intelligent Bar”), a Baidu Tieba forum known for absurd, pun-laden questions, yields significantly better performance [6]. One possible explanation is that the forum’s content consists almost entirely of language games built on deception and misdirection, and understanding its gags requires second-order thinking. Training on such data may teach models what deception and misdirection look like, much as stand-up comedy does, and stronger reasoning abilities may emerge as a result. Even on programming tasks, whose style is vastly different from the forum’s text, the models fine-tuned on this unusual data performed best.

For example, the question “Why doesn’t high school directly enroll university students to improve the graduation rate?” is deliberately misleading. Answering it requires second-order thinking, that is, asking “What would happen if a high school enrolled university students?”, in order to see what “high school” actually means. Before this, no training context had needed to spell out that “high school” entails “enrolling high school students”; the question forces that part of the term’s definition into the open.

▷ Figure 3. Example of a question from the Weakly Intelligent Bar.

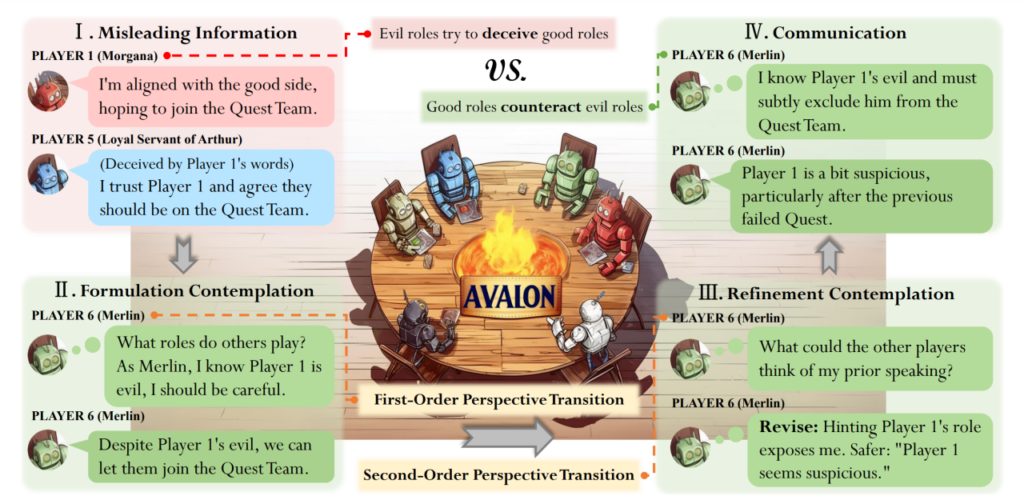

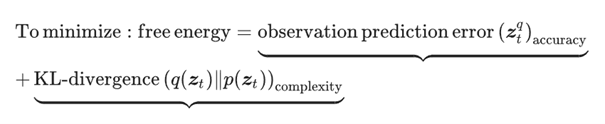

Another study aims to enable agents to think creatively and generate humorous captions for images, in the style of internet memes [7]. The creativity comes from Leap-of-Thought (LoT), which breaks away from the step-by-step reasoning of Chain-of-Thought (CoT) to explore remote associations. The researchers design a series of screening steps to collect creative responses, which are then used to further train the model to generate humorous comments. This resembles the way AlphaZero improves through self-play reinforcement learning without human game data.
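The generate-then-screen loop described above can be sketched generically, assuming a `generate` function that returns several candidate captions and a `score` function that rates how creative each one is. Everything here is hypothetical: the study [7] uses its own screening pipeline and training method, and `remoteness` is only a crude stand-in for a creativity judge (it measures how many caption words are absent from the image description). The selected pairs would feed the next round of training, which is not shown.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]  # (image description, selected caption)


def remoteness(prompt: str, caption: str) -> float:
    """Toy creativity proxy: share of caption words that do not appear in the prompt."""
    prompt_words = set(prompt.lower().split())
    words = caption.lower().split()
    return sum(w not in prompt_words for w in words) / len(words) if words else 0.0


def screen_round(
    generate: Callable[[str], List[str]],
    score: Callable[[str, str], float],
    prompts: List[str],
    keep: int = 2,
    threshold: float = 0.5,
) -> List[Pair]:
    """Sample candidates per prompt, rank them, and keep the best as new training pairs."""
    selected: List[Pair] = []
    for prompt in prompts:
        ranked = sorted(generate(prompt), key=lambda c: score(prompt, c), reverse=True)
        selected += [(prompt, c) for c in ranked[:keep] if score(prompt, c) >= threshold]
    return selected


if __name__ == "__main__":
    # Hypothetical model outputs for one image description.
    candidates = {
        "a cat staring at a laptop": [
            "a cat staring at a laptop",
            "me reading my own code from last year",
            "cat on laptop",
        ]
    }
    new_data = screen_round(lambda p: candidates[p], remoteness, list(candidates))
    print(new_data)  # [('a cat staring at a laptop', 'me reading my own code from last year')]
```

The literal caption scores 0 and the near-literal one about 0.33, so only the distant association survives the threshold; repeating the round on a model retrained with such pairs is what makes the process self-reinforcing.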

Wittgenstein’s last words were: “Tell them I’ve had a wonderful life.” Within the framework of language games, this sentence admits several readings. One sees it as the outcome of a lifelong reinforcement learning process, conducted through language, about “how to live happily.” This resembles Reinforcement Learning from Human Feedback (RLHF) in modern ChatGPT, where linguistic practice reshapes how an agent (whether Wittgenstein himself or a large model) evaluates and decides. Whether this counts as a language game is a matter of opinion.
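For reference, the standard RLHF recipe (as popularized by InstructGPT; ChatGPT’s exact training details are not public) first fits a reward model $r_\phi$ to human preference pairs, where $y_w$ is the preferred and $y_l$ the rejected response to prompt $x$, and then fine-tunes the policy $\pi_\theta$ to maximize that reward while a KL penalty with weight $\beta$ keeps it close to a reference model $\pi_{\mathrm{ref}}$:

$$
\mathcal{L}(\phi) = -\,\mathbb{E}_{(x,\, y_w,\, y_l)}\Big[\log \sigma\big(r_\phi(x, y_w) - r_\phi(x, y_l)\big)\Big]
$$

$$
\max_{\theta}\;\; \mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)}\big[r_\phi(x, y)\big] \;-\; \beta\, \mathbb{E}_{x \sim \mathcal{D}}\Big[D_{\mathrm{KL}}\big(\pi_\theta(\cdot \mid x)\,\big\|\,\pi_{\mathrm{ref}}(\cdot \mid x)\big)\Big]
$$

Here $\sigma$ is the logistic function and $\mathcal{D}$ the distribution of prompts; the human judgments enter only through $r_\phi$.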

Wittgenstein once remarked: “A serious and good philosophical work could be written consisting entirely of jokes.” Humor can be seen as an advanced language game in which participants, through reflection, give words new life in a new context. Large models can now generate humorous comments like the one in Figure 4. The logic behind it is that the model extracts the sense of closeness carried by the word “brother,” normally used of living beings, and cleverly applies it to non-living things.

▷ Figure 4. Meme and AI-generated creative comment example. Source: Reference [7], Chart: Cun Yuan.

In the future, we may see large language models summarize the common patterns behind various jokes and the philosophical thinking underlying them, much as Žižek does [8]. More broadly, because language games are unbounded, once agents (whether embodied humans or disembodied large models) begin interacting, it will be like peeling an onion without a core: new contexts keep appearing, whatever the area of focus. Large models might one day explain not only philosophical concepts but the fundamental principles of any discipline through jokes. Large language models trained on specialized philosophical terminology might even grasp abstract concepts such as “the experientiality of the Other.” Interested readers might try building an AI agent that plays at telling philosophical jokes as a “language game.”

The author’s conversation with Kimi, the intelligent assistant, can be viewed at: https://kimi.moonshot.cn/share/codpncbdf0j8tsi3i3eg

3. Family Resemblance and Word Vectors

Wittgenstein distinguished between “basic definitions” and “example definitions.” He opposed the search for necessary conditions governing the use of a word: a basic definition, framed in terms of necessary and sufficient conditions, claims to fix not only a concept’s boundaries but also its core, its very essence. His notion of “family resemblance,” by contrast, is a looser form of definition based on examples and similarities. It is a kind of example definition, in which the application of a concept depends on a network of similar features rather than a set of strict necessary and sufficient conditions. As he wrote in Philosophical Investigations:

“I can think of no better expression to characterize these similarities than ‘family resemblances’; for the various resemblances between members of a family: build, features, color of eyes, gait, temperament, etc., etc. overlap and criss-cross in the same way.—And I shall say: ‘games’ form a family.”

On the family-resemblance view, a large model’s grasp of concepts should likewise be seen as constantly evolving rather than formally predefined, as it was in earlier expert systems. One could say that the replacement of expert systems by large language models bears out Wittgenstein’s anti-essentialism, an insight that arrived well before the empirical evidence for it.

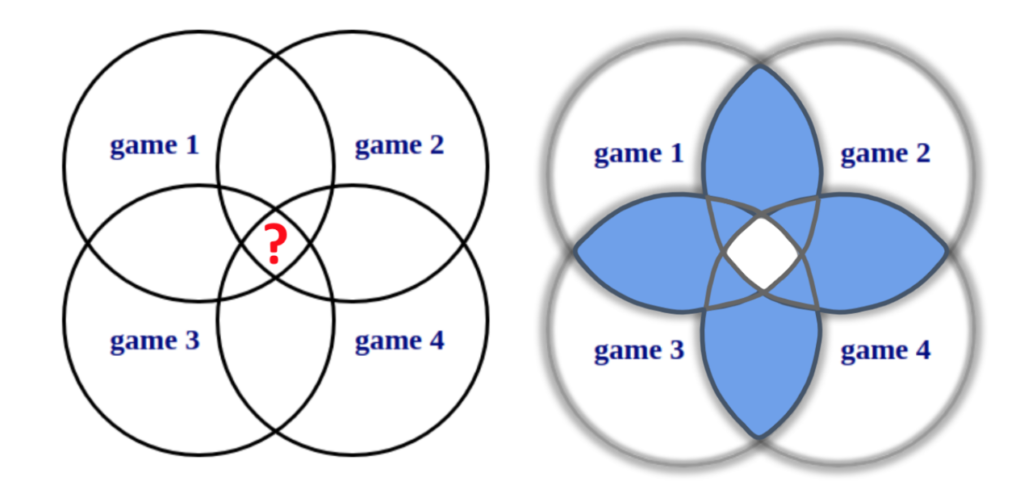

▷ Figure 5. Left: a Venn diagram of different language games, illustrating why language games are hard to define logically: almost no attribute is shared by all games. Right: the later Wittgenstein held that family resemblance lives in fuzzy boundaries, so words and their meanings are not fixed. Instead they are growing, multifaceted, and ambiguous, and recognizing them relies on largely intuitive, unconscious observations of similarity. Source: “Wittgenstein’s Philosophy of Language: The Philosophical Origins of Modern NLP Thinking.”
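The Venn-diagram point can be checked with a toy example. The feature sets below are invented for illustration (loosely following the examples Wittgenstein lists, such as board games, ring-a-ring-a-roses, and a child throwing a ball against a wall), and Jaccard similarity with a threshold is just one simple, assumed way to model “resemblance”; it is not Wittgenstein’s own proposal.

```python
from functools import reduce
from typing import Dict, Set

# Hypothetical feature sets for a few "games".
GAMES: Dict[str, Set[str]] = {
    "chess": {"competition", "rules", "winning_and_losing", "skill"},
    "solitaire": {"rules", "winning_and_losing", "luck", "solitary"},
    "ring_a_ring_a_roses": {"amusement", "group", "singing"},
    "ball_against_wall": {"amusement", "skill", "solitary"},
    "werewolf": {"competition", "group", "deception", "rules", "amusement"},
}

# An essentialist definition would need a feature shared by every game.
core = reduce(set.intersection, GAMES.values())


def jaccard(a: Set[str], b: Set[str]) -> float:
    return len(a & b) / len(a | b)


def resembles_family(features: Set[str], family: Dict[str, Set[str]], threshold: float = 0.3) -> bool:
    """Membership by being close enough to some member, not by sharing a common core."""
    return max(jaccard(features, member) for member in family.values()) >= threshold


if __name__ == "__main__":
    print("shared core:", core)  # an empty set: no feature runs through all the games
    hide_and_seek = {"amusement", "group", "rules", "winning_and_losing"}
    tax_return = {"rules", "paperwork"}
    print(resembles_family(hide_and_seek, GAMES))  # True: overlaps strongly with werewolf
    print(resembles_family(tax_return, GAMES))     # False: only "rules" in common
    # With an empty core, "has every core feature" holds for anything, so it cannot discriminate.
    print(core <= tax_return)                      # True
```

Every pair of neighbouring games overlaps somewhere, yet the intersection of all of them is empty: the “criss-crossing” similarities do the work that a common essence cannot.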

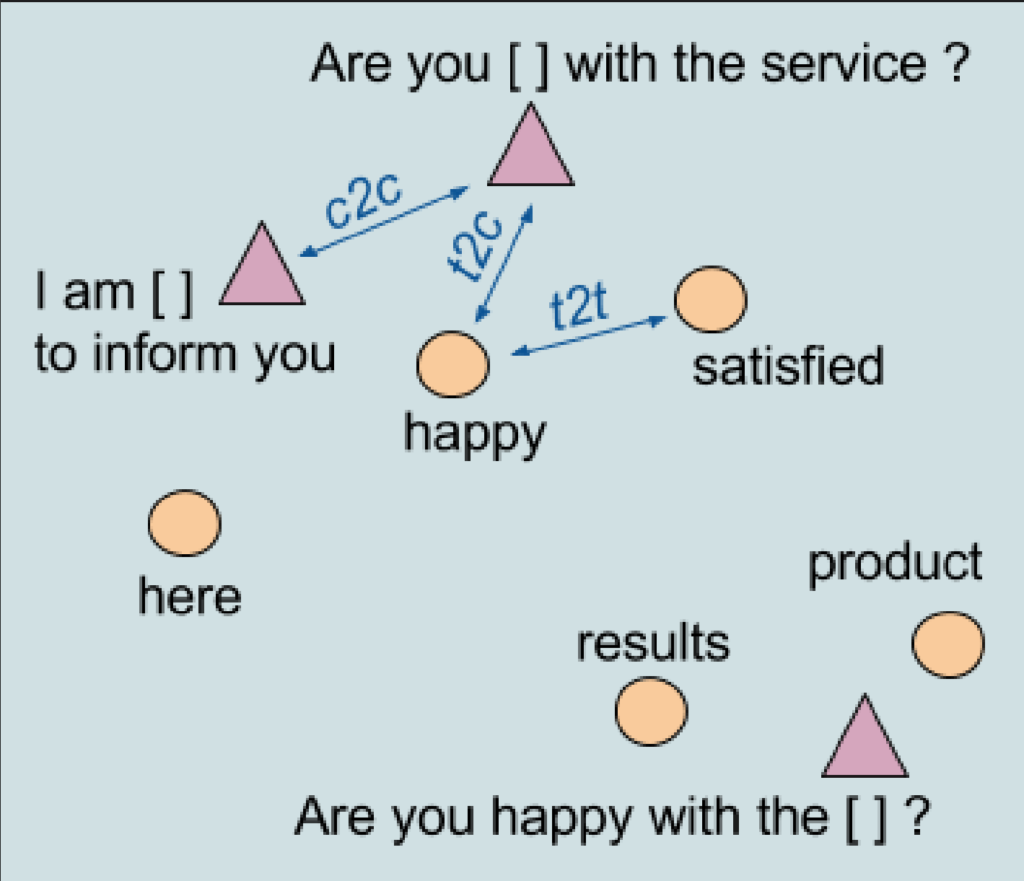

Similarity between words also appears in contemporary natural language processing, for example in the word vectors produced by techniques such as word2vec. These vectors not only place contextually similar words close together in vector space but also support semantic arithmetic such as “king − queen ≈ man − woman” (equivalently, king − man + woman ≈ queen), echoing Wittgenstein’s notion of “family resemblance.”

▷ Figure 6. Left: the context2vec architecture, a bidirectional LSTM trained with a cloze-style prediction objective. Right: context2vec embedding rules. Source: context2vec: Learning Generic Context Embedding with Bidirectional LSTM.
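The analogy arithmetic can be illustrated with hand-made toy vectors. The three dimensions (roughly “royalty,” “male,” “female”) and their values are invented for illustration; real word2vec vectors are learned from co-occurrence statistics and have hundreds of dimensions, but the arithmetic and the nearest-neighbour lookup work the same way.

```python
import math
from typing import Dict, List

Vector = List[float]

# Hand-made 3-D "embeddings": [royalty, male, female] (illustrative only).
VECTORS: Dict[str, Vector] = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.2, 0.2],
}


def add(a: Vector, b: Vector) -> Vector:
    return [x + y for x, y in zip(a, b)]


def sub(a: Vector, b: Vector) -> Vector:
    return [x - y for x, y in zip(a, b)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def analogy(a: str, b: str, c: str, vectors: Dict[str, Vector]) -> str:
    """Solve a : b :: c : ? as the word nearest to b - a + c (query words excluded)."""
    target = add(sub(vectors[b], vectors[a]), vectors[c])
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))


if __name__ == "__main__":
    print(analogy("man", "king", "woman", VECTORS))  # queen
    # The two difference vectors point the same way: king - queen vs man - woman.
    print(sub(VECTORS["king"], VECTORS["queen"]), sub(VECTORS["man"], VECTORS["woman"]))
```

With trained vectors, libraries such as gensim expose the same operation, for example `model.wv.most_similar(positive=["king", "woman"], negative=["man"])`.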

If basic definitions are like SQL queries, which retrieve knowledge from a database by precisely specified criteria, then example definitions, like word vectors, are akin to the brushstrokes of an Impressionist painting: they capture how data is used in a way that departs from rigid tabular structure. The complexity of reality means that many objects cannot be exhaustively described by a precise, identifiable set of quantifiable attributes; defining them by their similarity to other objects is much easier.
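The contrast can be made concrete with Python’s built-in `sqlite3` module. The table, its features, the exemplar, and the 0.6 threshold are all hypothetical, and the feature-matching score is a crude stand-in for word-vector similarity. The SQL query plays the role of a basic definition (“a bird has feathers and flies”), while the similarity test plays the role of an example definition (“a bird is something sufficiently like a sparrow”).

```python
import sqlite3
from typing import Sequence

# Hypothetical feature table: 1 = has the feature, 0 = lacks it.
ANIMALS = [
    # name,      feathers, flies, lays_eggs, beak, fur
    ("sparrow", 1, 1, 1, 1, 0),
    ("penguin", 1, 0, 1, 1, 0),
    ("bat", 0, 1, 0, 0, 1),
    ("platypus", 0, 0, 1, 1, 1),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE animals (name TEXT, feathers INTEGER, flies INTEGER, "
    "lays_eggs INTEGER, beak INTEGER, fur INTEGER)"
)
conn.executemany("INSERT INTO animals VALUES (?, ?, ?, ?, ?, ?)", ANIMALS)

# Basic definition: membership by exact, necessary-and-sufficient conditions.
by_rule = [name for (name,) in conn.execute(
    "SELECT name FROM animals WHERE feathers = 1 AND flies = 1 ORDER BY name"
)]


def similarity(a: Sequence[int], b: Sequence[int]) -> float:
    """Share of features on which two animals agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


# Example definition: membership by resemblance to known exemplars.
features = {row[0]: row[1:] for row in conn.execute("SELECT * FROM animals")}
exemplars = ["sparrow"]
by_example = sorted(
    name for name, feats in features.items()
    if max(similarity(feats, features[e]) for e in exemplars) >= 0.6
)

print(by_rule)     # ['sparrow']: the rule excludes the flightless penguin
print(by_example)  # ['penguin', 'sparrow']: the penguin is close enough to the exemplar
```

The rule is exact but brittle at the edges of the concept, while the similarity test tolerates exceptions at the cost of a threshold that must be chosen by judgment rather than derived from a definition.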

Language models have been shown to generate SQL queries from natural language [9] and to serve as open knowledge graphs [10]. This follows naturally from the language-game paradigm. Language games are not limited to one person directing others to make particular gestures or take particular postures; they also include predicting outcomes under specific conditions by observing regularities (such as how different metals react with acids). In reinforcement learning tasks, large language models can extract knowledge about actions (how to do something) and goals (what to do) from the current dialogue context [11]. The same study shows that LLMs can appropriately balance existing pre-trained knowledge against new knowledge arising in dialogue. Revising one’s cognitive model on the basis of dialogue in this way can itself be seen as the “language practice” Wittgenstein described, and the same feature shows up when large models play tabletop games like Werewolf and Avalon.

▷ Figure 7. Relevant world knowledge retrieved for a task input x augments the features of x to improve the final prediction y. Source: Learning beyond datasets: Knowledge Graph Augmented Neural Networks for Natural Language Processing.

For NLP researchers, the idea of language games means abandoning the assumption that “language understanding can exist independently of its function and yield a purely objective understanding.” Current NLP benchmarks try to decompose “understanding” into manageable, evaluable units, usually prediction, string-mapping, or classification tasks (such as sentiment analysis, question answering, word sense disambiguation, and coreference resolution), as in the SuperGLUE and BIG-bench benchmarks.

Whether these tasks truly involve “using language,” however, remains debatable. The researchers behind these test paradigms often assume that there is a way of understanding language that transcends actual use, treating language understanding as merely mapping certain linguistic units onto others and seldom connecting language with real activities. A better goal may be not merely to build machines with “language understanding” but to explore whether agents can change other interlocutors’ evaluations and decisions through language, as in the earlier examples of decomposing robot tasks and of language models balancing the influence of existing knowledge.

4. Conclusion

Philosophers’ ideas, especially those of geniuses, often run ahead of their time. Many problems in contemporary natural language processing find inspiration, vague yet incisive, in Wittgenstein’s numerous manuscripts and notes. The concept of family resemblance, for example, can lay a foundation for understanding, modeling, and building natural language systems that capture the complexity of language. Researchers should not be content with theoretical deliberation alone, however; empirical research is needed to validate or refute these philosophical hypotheses. As discussed earlier, the author keeps an open mind about the existence of private language and considers semi-private language a possible intermediate state.

“The limits of my language mean the limits of my world.” This idea is especially relevant to today’s large models, whose autoregressive nature makes it hard for them to define their own boundaries; this contributes to problems such as hallucination and difficulty with self-referential tasks like “write a sentence of exactly ten words.” Until NLP can capture the full complexity of language, Wittgenstein’s philosophy will teach us humility. His early work, Tractatus Logico-Philosophicus, holds that “the limits of language are determined by the elementary propositions, and the application of logic determines which propositions are elementary. Logic cannot foresee what lies in its application.” He accordingly declined to discuss beauty, religion, ethics, and the like, “defining all those things many people are babbling about by remaining silent about them.”

Some researchers working on artificial general intelligence (AGI) seem to have forgotten this lesson, focusing on areas without support from elementary propositions, such as consciousness and superintelligence. The lesson is also consistent with Wittgenstein’s later thought, which brought the concept of language down from idealized abstraction to the rough ground of practice. Having recognized that some things cannot be spoken of, our task may not be to avoid them but to keep exploring those unknown and inexpressible boundaries through a series of concrete multimodal language tasks, such as “interactive completion,” “survival,” and “seeking happiness.” This process is also the beginning of endless progress.

Robert Hagstrom wrote in The Last Liberal Art: “The meaning of words is defined by their function in any language game. Wittgenstein did not believe in an omnipotent, independent logic existing in the world apart from what we observe but took a step back and considered that the world we see is defined and given meaning by the words we choose. In short, the world is created by us.”

Today it is no longer humans alone who construct our world; it is a collaboration between humans and machines, with machines playing an increasingly significant role. We need to understand and regulate generative AI systems, such as large language models and vision models, with care, because they shape our cultural environment and influence our narratives. Each of us lives in a world we help create, and the mechanism of creation has now changed. We stand at a new starting point. No one has the freedom to force everything into a predefined mold. Be true to yourself: in the end, as Kant put it, people must “legislate for nature,” and today we do so by understanding and taking part in the discussions surrounding large models.