Learning by thinking (LbT) is ubiquitous in daily life: scientists gain new insights through thought experiments, drivers work out how to navigate around obstacles through mental simulation, and writers acquire new knowledge while trying to express their ideas. In each of these examples, learning occurs without any new external input. With the advance of computer science, the LbT phenomenon has become increasingly visible in machines as well.

For instance, GPT-4 corrected a misunderstanding while explaining its own answer and arrived at the correct conclusion without any external feedback. Similarly, when large language models (LLMs) are prompted to “think step-by-step” or to produce a chain of thought [1], they can provide more accurate answers without external corrections.

▷ Screenshot of a GPT-4 conversation. Source: GPT

However, LbT is often considered a “paradox.” On one hand, in a certain sense, the learner does not acquire new information—they can only utilize elements that already exist in their mind. On the other hand, learning does indeed occur—the learner gains new knowledge (such as the answer to a math problem) or new abilities (such as the capacity to answer new questions or perform new reasoning).

Recently, Professor Tania Lombrozo from the Concept and Cognition Laboratory at Princeton University’s Department of Psychology published a review in Trends in Cognitive Sciences that offers a solution to this paradox [2]. By analyzing four specific modes of learning (explaining, simulating, comparing, and reasoning), the article reveals the similar computational problems and solutions underlying both human and artificial intelligence. Both leverage the re-representation of existing information to support more reliable reasoning. She concludes that for resource-limited systems such as humans, LbT helps extract and process the information that current needs call for, without constantly generating new information.

So, can the results produced by this almost “fantasizing” form of learning be considered “truth”? Before drawing a conclusion, let us first review the evidence supporting LbT.

▷ Lombrozo, Tania. “Learning by thinking in natural and artificial minds.” Trends in Cognitive Sciences (2024).

1. Four Typical Modes of Learning by Thinking

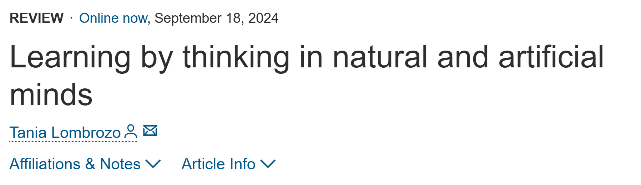

Let us first explore four instances of LbT: learning through explaining, simulating, analogical reasoning, and reasoning. Of course, LbT is not limited to these four modes—on one hand, there are other forms of LbT, such as learning through imagination; on the other hand, these learning modes can be further subdivided. For example, when discussing learning through explaining, there is a distinction between teleological explanations and mechanistic explanations.

In fact, due to the intricate relationships between the learning process and other cognitive mechanisms such as attention and memory, it is challenging to categorize learning fundamentally. Instead, the four learning processes we discuss help us understand the ubiquity and strengths of LbT. This allows for parallel comparisons between human thinking and artificial intelligence.

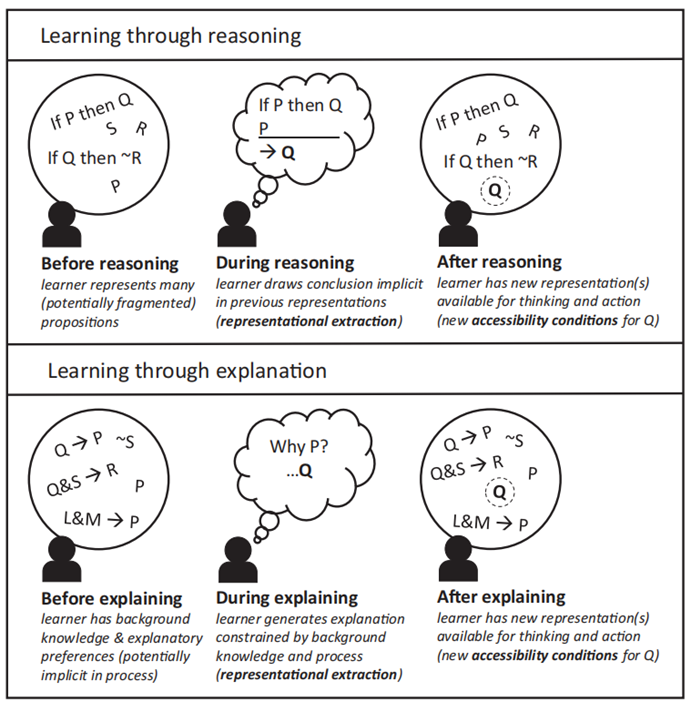

▷ Figure 1. Different Types of Learning. Source: [2]

(1) Learning through Explaining

In a classic study [3], researchers found that “high-achieving” students employed different strategies compared to “low-achieving” students when learning material. High-achieving students tended to spend more time explaining the text and examples to themselves. Subsequent studies that prompted or guided students to engage in explanations showed that explanatory learning indeed enhanced learning outcomes, particularly for questions that extended beyond the learning material itself. When external feedback is absent during the learning process, explanatory learning becomes a form of LbT. This learning mode can be divided into two categories: corrective learning and generative learning.

In corrective learning, learners identify and improve flaws in their existing representations. For example, the phenomenon known as the “illusion of explanatory depth (IOED)” demonstrates that people often overestimate their understanding of how devices work. It is only after attempting to explain that they become aware of the limitations of their understanding [4].

Generative learning refers to the process of learners constructing new representations through explanation. For instance, when learning new categories, participants who were prompted to explain were more likely to generate abstract representations and identify broad patterns within examples.

A similar approach is applied in AI research, where AI systems generalize by generating self-explanations that extract information from training sets. Recent studies have also shown that deep reinforcement learning systems that generate natural language explanations alongside task answers perform better in relational and causal reasoning tasks than systems that do not generate explanations or those that use explanations as input. These systems do not rely solely on simple features but can summarize generalizable information from complex data.

▷ Source: GPT

(2) Learning through Simulating

Imagine three gears arranged horizontally. If the leftmost gear rotates clockwise, in which direction will the rightmost gear turn?

Most people would solve this problem through “mental simulation,” constructing a mental image of the gears’ rotation. Throughout the history of science, thought experiments in many fields have been classic examples of mental simulation. For example, Einstein explored relativity by simulating the movement of photons and trains, while Galileo studied gravity by simulating the process of objects falling. These mental simulations and thought experiments provide profound insights without relying on new external data.
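The gear question can also be worked through as a tiny explicit simulation. The sketch below is illustrative only: it assumes the three gears are meshed in a row, so that each gear turns opposite to its neighbor, and the function name `gear_directions` is a hypothetical choice made for this example.

```python
def gear_directions(first_direction: str, count: int) -> list[str]:
    """Return the rotation direction of each gear in a meshed row.

    Assumption: adjacent gears are meshed, so each one turns in the
    opposite direction to the gear on its left.
    """
    opposite = {
        "clockwise": "counterclockwise",
        "counterclockwise": "clockwise",
    }
    directions = [first_direction]
    for _ in range(count - 1):
        directions.append(opposite[directions[-1]])
    return directions


directions = gear_directions("clockwise", 3)
print(directions[-1])  # clockwise
```

The direction flips twice across three gears, so the rightmost gear turns the same way as the leftmost one.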

Similar to explanatory learning, mental simulation can be either corrective or generative.

A corrective example of mental simulation comes from a study in which participants worked through two kinds of thought experiments: one that elicited intuitions consistent with Newtonian mechanics, and one that elicited erroneous impetus-style thinking (e.g., believing that objects require continuous force to remain in motion). Faced with these conflicting thought experiments, participants sometimes judged correctly in line with Newtonian mechanics and were at other times misled by impetus-style reasoning. After the experiment, however, participants had corrected their initial misconceptions through the thought experiments and no longer endorsed the incorrect impetus-based judgments.

An example of generative mental simulation is found in causal reasoning. When determining whether one event causes another, people often engage in “counterfactual simulation.” For instance, in an experiment where the first ball hits the second ball, altering its trajectory to reach a target, participants would simulate what would happen if the first ball had not hit the second ball, thereby assessing the causal relationship.
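The logic of counterfactual simulation can be sketched in a few lines. This is a toy model, not the procedure used in the study: the grid world, the collision at step 5, the target position, and the name `simulate` are all assumptions made for illustration.

```python
def simulate(collision: bool, steps: int = 10) -> tuple[int, int]:
    """Roll ball B forward on an integer grid and return its final position.

    Hypothetical setup: B starts at (0, 0) and moves one cell to the right
    per step. If the collision occurs (at step 5), ball A's impact gives B
    one unit of upward velocity.
    """
    x, y = 0, 0
    vx, vy = 1, 0
    for step in range(steps):
        if collision and step == 5:
            vy = 1  # ball A deflects ball B upward
        x, y = x + vx, y + vy
    return x, y


TARGET = (10, 5)

actual = simulate(collision=True)           # what happened
counterfactual = simulate(collision=False)  # what would have happened

# A is judged a cause if B reaches the target only when the collision occurs.
a_caused_outcome = (actual == TARGET) and (counterfactual != TARGET)
print(actual, counterfactual, a_caused_outcome)  # (10, 5) (10, 0) True
```

No new observation enters the second run; the causal judgment comes entirely from rerunning the same internal model with one event removed.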

Similar simulation processes are widely applied in the AI field. For example, in deep reinforcement learning, model-based training methods use environmental representations to generate data for training policies, which closely resemble human mental simulations. Some AI algorithms approximate optimal solutions by simulating multiple decision sequences. In both humans and AI, simulations generate “data” that provide essential input for learning and reasoning.
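As a rough illustration of "simulating multiple decision sequences," the sketch below plans in a one-dimensional toy world by rolling out every candidate action sequence inside a known model and keeping the best one. It is a deliberately simplified stand-in (exhaustive enumeration rather than a learned model or tree search), and the world, the reward, and all names are assumptions made for this example.

```python
from itertools import product

GOAL = 3
ACTIONS = (-1, 0, 1)  # step left, stay, step right


def model(position: int, action: int) -> tuple[int, int]:
    """Internal model of the world: predict the next position and reward."""
    next_position = position + action
    reward = -abs(GOAL - next_position)  # closer to the goal is better
    return next_position, reward


def rollout(start: int, plan: tuple[int, ...]) -> int:
    """Simulate one action sequence in the model and return its total reward."""
    position, total = start, 0
    for action in plan:
        position, reward = model(position, action)
        total += reward
    return total


def best_plan(start: int, horizon: int) -> tuple[tuple[int, ...], int]:
    """Score every action sequence by simulation and keep the best."""
    candidates = product(ACTIONS, repeat=horizon)
    scored = ((plan, rollout(start, plan)) for plan in candidates)
    return max(scored, key=lambda item: item[1])


plan, value = best_plan(start=0, horizon=4)
print(plan, value)  # (1, 1, 1, 0) -3
```

Every reward used to choose the plan is generated by the model itself, which is the sense in which simulation supplies "data" without new external input.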

▷ Source: Corey Brickley

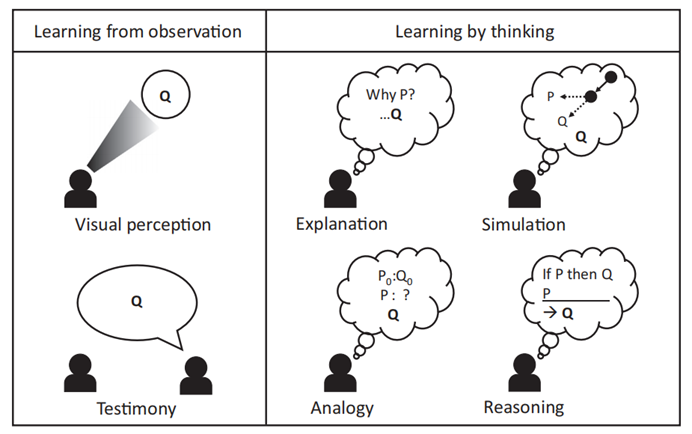

(3) Learning through Analogical Reasoning and Comparison

When constructing the theory of natural selection, Darwin analogized artificial selection to biological evolution, thereby deducing the mechanism of variation in natural selection. Such analogical reasoning is common in scientific research, especially when researchers have some understanding of both objects being compared. Through analogical thinking, new insights can emerge, supporting “learning by thinking.”

Learning through analogy does not rely entirely on autonomous thinking but also incorporates external information. Consequently, the learning outcomes reflect both the information provided by the researcher and the participant’s analogical reasoning abilities. However, some studies isolate the impact of analogical thinking by providing all participants with the same analogical information, while only some are prompted to use this information when solving new problems.

For example, a study on mathematical learning showed that the more participants were reminded to compare samples, the less likely they were to be misled by superficial similarities in problems, demonstrating the corrective effect of analogical learning. Another experiment required participants to identify similarities and differences between two groups of robots. The results showed that only participants who actively engaged in comparisons were more likely to discover subtle rules, illustrating the generative effect of analogical thinking.

Analogical reasoning has also attracted attention in the field of artificial intelligence. As in the human experiments, most demonstrations of analogical reasoning in AI are not purely LbT; instead, AI systems are typically given a source analogy and asked to solve problems related to it. However, recent research indicates that machines can construct analogies through their own thinking or knowledge even when no source analogy is provided [4]. In tasks such as mathematical problem-solving and code generation, the most effective prompts ask large language models (LLMs) to generate multiple related but diverse examples, describe each example, and explain its solution before solving the new problem. This process likely integrates reasoning and explanation. Such analogical prompts outperform many state-of-the-art prompting approaches and show some similarity to human learning outcomes under comparison prompts.

Through analogical reasoning, not only can errors be corrected, but deeper levels of thinking can be stimulated, leading to the discovery of new concepts or rules. This is an important learning mechanism both in human learning and artificial intelligence.

▷ Source: NGS ART DEPT

(4) Learning through Reasoning

Even seemingly simple reasoning processes require accurate information and logical processing. For example, I might know that today is Wednesday and also know that “if today is Wednesday, I should not park on a certain campus,” but for various reasons, I might overlook this logical relationship and mistakenly park in a no-parking zone. This illustrates that even when logic holds, additional attention and processing capacity are needed during reasoning.

More complex reasoning requires deeper thinking. For instance:

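Premise 1: “Everyone loves anyone who loves someone.”

Premise 2: “My neighbor Sarah loves Taylor Swift.”

Conclusion: “Therefore, Donald Trump loves Kamala Harris.”

At first glance this conclusion seems absurd, yet it follows validly from the premises. Sarah loves someone, so by Premise 1 everyone loves Sarah. Everyone therefore loves someone, so by Premise 1 again everyone loves everyone, which includes Donald Trump loving Kamala Harris. That such a conclusion is hard to see, and hard to accept, indicates that effective reasoning requires not only logical deduction but also reflection and practical judgment.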
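The validity of this argument can be checked mechanically. The sketch below reads Premise 1 as “everyone loves anyone who loves someone,” restricts “everyone” to a small hypothetical domain of four people, and applies the premise repeatedly until nothing new can be derived. The function name and the domain are assumptions made for illustration.

```python
from itertools import product

# Assumed domain for "everyone"; any domain containing these people works.
PEOPLE = ["Sarah", "Taylor Swift", "Donald Trump", "Kamala Harris"]


def close_under_premise_1(loves: set[tuple[str, str]]) -> set[tuple[str, str]]:
    """Apply 'everyone loves anyone who loves someone' until a fixed point."""
    loves = set(loves)
    while True:
        lovers = {x for x, _ in loves}  # people who love someone
        derived = set(product(PEOPLE, lovers))  # everyone loves each lover
        if derived <= loves:
            return loves
        loves |= derived


# Premise 2 is the only starting fact.
facts = close_under_premise_1({("Sarah", "Taylor Swift")})
print(("Donald Trump", "Kamala Harris") in facts)  # True
```

The first pass derives that everyone loves Sarah; the second derives that everyone loves everyone. The conclusion was implicit in the premises all along, but only becomes available once the derivation is actually carried out.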

Reasoning can also play a corrective role [4]. In one study, participants evaluated arguments for answers to reasoning problems without knowing that these arguments came from their own previous responses. The results showed that two-thirds of the participants correctly rejected their own previously invalid reasoning. This type of corrective reasoning plays a crucial role in recognizing erroneous intuitions. Consider the classic “bat and ball” question from the Cognitive Reflection Test (CRT): a bat and a ball cost $1.10 in total, and the bat costs $1.00 more than the ball. How much does the ball cost? The intuitive answer is $0.10, but the correct answer is $0.05. Reaching the correct answer requires overriding the initial intuitive response and engaging in more rigorous thinking.
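The arithmetic behind the correct answer is short. Writing $b$ for the price of the ball in dollars (a symbol introduced here for illustration), the bat costs $b + 1.00$, so:

$$
\begin{aligned}
b + (b + 1.00) &= 1.10 \\
2b &= 0.10 \\
b &= 0.05
\end{aligned}
$$

The intuitive answer fails the same check: a ball at $0.10 would put the bat at $1.10 and the total at $1.20, not $1.10.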

▷ Source: mannhowie.com

In the field of artificial intelligence, traditional symbolic reasoning architectures typically rely on explicit rules or probabilistic calculations. In contrast, the reasoning capabilities of deep learning systems (such as large language models) are still developing. For complex problems, prompting LLMs to reason step by step has proven more effective than direct prompting, because it helps overcome the errors that direct answers tend to produce. This mode of reasoning is particularly advantageous for difficult tasks, showcasing the potential of AI in the domain of reasoning.

2. Unraveling the Mysteries of Learning by Thinking (LbT)

In the preceding sections, we explored how natural and artificial minds learn through thinking. Although their operational mechanisms differ, both face the same fundamental question: Why can thinking itself facilitate learning?

Heinrich von Kleist, a poet and playwright, once discussed the benefits of learning by speaking: by expressing our thoughts to others, we can clarify and develop our ideas in the workshop of reasoning. He also offered a way to explain the LbT paradox: it is not we ourselves who know, but rather a particular state of our minds. In other words, learning in LbT does not involve creating entirely new knowledge but making existing knowledge accessible. Thinking can serve as a source of learning because the foundational knowledge already exists in our minds, even if we are not consciously aware of it.

From a cognitive perspective, the process of learning by reasoning involves the learner combining two premises to derive a conclusion that is logically valid but not explicitly recognized. Although the conclusion is already embedded within the premises, it is only through the reasoning process that the conclusion becomes apparent, thereby constituting learning. In this process, reasoning is not merely a mechanical combination of information; it also generates a new representation that exists independently of the premises.

▷ Source: Caleb Berciunas

Applying this idea to learning by explaining is more complex. During explanation, learners often cannot clearly identify what serves as an explicit premise, which makes the accessibility of representations particularly important. The format of a representation affects what can readily be extracted from it. For example, whether numbers are written in Arabic or Roman numerals strongly influences how easily we can add them or perceive features of the input such as parity. Processes such as explaining, comparing, and simulating can likewise change accessibility conditions: they support representational extraction, creating new representations with new accessibility conditions.
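The numeral example can be made concrete. In the sketch below, which is only an illustration with hypothetical function names, the same number is given in two formats: parity can be read directly off the last Arabic digit, whereas the Roman form has to be re-represented (here, converted to an integer) before parity becomes accessible.

```python
ROMAN_VALUES = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}


def is_even_arabic(numeral: str) -> bool:
    """Parity is directly accessible: inspect only the final digit."""
    return numeral[-1] in "02468"


def roman_to_int(numeral: str) -> int:
    """Re-represent a Roman numeral as an integer."""
    total = 0
    # Pair each symbol with its successor; a space marks the end.
    for symbol, next_symbol in zip(numeral, numeral[1:] + " "):
        value = ROMAN_VALUES[symbol]
        # Subtractive notation: a smaller symbol before a larger one counts negatively.
        if next_symbol != " " and value < ROMAN_VALUES[next_symbol]:
            total -= value
        else:
            total += value
    return total


def is_even_roman(numeral: str) -> bool:
    """Parity becomes accessible only after the extra conversion step."""
    return roman_to_int(numeral) % 2 == 0


print(is_even_arabic("38"), is_even_roman("XXXVIII"))  # True True
```

Both inputs carry the same information, but only one of them makes parity cheap to extract, which is the sense in which a new representation has new accessibility conditions.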

Representational extraction refers to the formation of a specific cognitive structure through thinking or reasoning, which possesses different accessibility conditions. Once a representation is extracted, it becomes a premise that subsequently limits the scope of output.

This is particularly evident in learning by explaining—when learners engage in explanations, they tend to select explanations with fewer root causes. In other words, they are more inclined to consider certain causal hypotheses as more reasonable and superior to others. Such selections create implicit premises that restrict the space for subsequent reasoning. For instance, when learners develop a preference for a particular explanation, this preference itself constitutes a new premise, thereby influencing the conclusions drawn thereafter.

▷ Action of Thinking through Reasoning and Explaining. Source: [2]

Understanding the differences in accessibility and the role of representational extraction helps us extend the logic of reasoning-based learning to other learning forms, such as explaining, comparing, and simulating. The cognitive processes in each form can be seen as continuously extracting new representations and establishing new reasoning premises, thereby progressively advancing the learning process. However, this does not resolve another issue: Why should we expect conclusions derived from explanations, simulations, or other LbT processes to generate new knowledge, that is, true and factual knowledge?

Of course, the outputs of LbT are not always correct. However, because these processes may be influenced by evolution, experience, or the design of artificial intelligence, we can reasonably assume that LbT at least partially reflects the structure of the world, making it possible to produce relatively reliable conclusions [5]. Even if the outputs are not entirely accurate, they can still guide thinking and actions. For example, when learning through explaining, even if the generated explanations are incorrect, the explanation process itself may improve subsequent inquiries and judgments, such as helping learners identify conflicts between representations or represent a domain in a more abstract manner.

Having understood the mechanisms and potential value of LbT, the next question is: Why is LbT necessary?

We can explain this through an analogy with computer systems. In artificial systems, limitations in memory and processing time determine how much prospective computation can be performed. LbT provides a way to generate novel and useful representations on demand, rather than solely relying on existing learning outcomes. Therefore, it can be assumed that LbT is particularly prevalent in intelligent agents with limited resources (such as time and computational power) [6], especially when facing uncertainties in future environments and goals.

These observations also suggest differences in the role of LbT between natural and artificial thinking. As artificial intelligence gradually overcomes the resource limitations of human thinking or when it deals with problems that do not involve high uncertainty (such as operating in very narrow domains), we anticipate significant differences in how AI and humans perform in LbT processes under these conditions.

3. Conclusion

Learning by Thinking (LbT) is ubiquitous: humans acquire knowledge not only through observation but also through methods such as explaining, comparing, simulating, and reasoning. Recent studies have shown that artificial intelligence systems can also learn in similar ways. In both contexts, we can resolve the LbT paradox by recognizing that the accessibility conditions of representations change. LbT enables learners to extract representations with new accessibility conditions and use these representations to generate new knowledge and abilities.

In a sense, LbT reveals the limitations of cognition. A system with unlimited resources and facing limited uncertainty could compute all consequences immediately through observation. However, in reality, both natural and artificial intelligence face limitations in resources and high uncertainty related to future judgments and decision-making. In this context, LbT provides a mechanism for on-demand learning. It makes full use of existing representations to address the environment and goals currently faced by the agent.

Nevertheless, there remain many unresolved mysteries regarding the implementation of LbT in natural and artificial intelligence. We do not fully understand how these processes specifically promote the development of human intelligence or under what circumstances they might lead us astray. Uncovering the answers to these questions requires not only deep thinking but also the support of an interdisciplinary cognitive science toolkit.

[1] WEI J, WANG X, SCHUURMANS D, et al. Chain of Thought Prompting Elicits Reasoning in Large Language Models [J]. ArXiv, 2022, abs/2201.11903.


[2] LOMBROZO T. Learning by thinking in natural and artificial minds [J]. Trends in Cognitive Sciences, 2024, S1364-6613(24)00191-8. doi:10.1016/j.tics.2024.07.007.

[3] CHI M T H, BASSOK M, LEWIS M W, et al. Self-Explanations: How Students Study and Use Examples in Learning to Solve Problems [J]. Cognitive Science, 1989, 13(2): 145-82.

[4] ROZENBLIT L, KEIL F. The misunderstood limits of folk science: an illusion of explanatory depth [J]. Cognitive Science, 2002, 26(5): 521-62.

[5] TESSER A. Self-Generated Attitude Change [M]//BERKOWITZ L. Advances in Experimental Social Psychology. Academic Press, 1978: 289-338.

[6] BERKE M D, WALTER-TERRILL R, JARA-ETTINGER J, et al. Flexible Goals Require that Inflexible Perceptual Systems Produce Veridical Representations: Implications for Realism as Revealed by Evolutionary Simulations [J]. Cognitive Science, 2022, 46(10): e13195.

[7] GRIFFITHS T L. Understanding Human Intelligence through Human Limitations [J]. Trends in Cognitive Sciences, 2020, 24(11): 873-83.