Since the concept of artificial intelligence was first introduced, the pursuit of Artificial General Intelligence (AGI) has been a persistent goal within the engineering community. The field of AI is currently thriving, with various expert systems* designed for specific tasks, and advancements in large language models and deep learning technologies seemingly heralding the dawn of AGI. However, these systems still only perform narrow and specialized tasks and do not meet the "general" criterion. In fact, "narrow intelligence can never accumulate into AGI. The realization of AGI is not about the quantity of individual capabilities but about their integration." *

Some philosophers argue that AGI essentially mirrors artificial general life, as intelligence, built upon the foundation of life, should arise from simulated thought processes. This raises questions about whether intelligence needs to be based on biological entities and what insights natural intelligence can provide.

Karl Friston and others, in their paper "Designing Ecosystems of Intelligence from First Principles," outline a vision for the research and development in the AI field over the next decade and beyond. The paper suggests that AI should move beyond mimicking the brain to emulate nature, exploring how intelligence manifests in biological entities and even in general physical systems. It sketches a potential path towards creating superintelligent AI: through active inference* and the adoption and sharing of perspectives and narratives to build collective intelligence. Lastly, the paper also explores the developmental stages of AI and related ethical issues.

*Expert System: An expert system is a computer software system that possesses expert-level knowledge and experience in a specific domain, capable of simulating human expert thought processes to reason and solve complex problems in that field.

*The realization of AGI is not about the quantity of individual capabilities but about their integration: see M. Loukides and B. Lorica, "What Is Artificial Intelligence?" O'Reilly, June 20, 2016.

*Active Inference: Active inference, an extension by Karl Friston based on the free energy principle, explains how agents, whether biological or artificial, actively explore the world through behavior to minimize prediction errors.

▷ Friston, Karl J., et al. "Designing ecosystems of intelligence from first principles." Collective Intelligence 3.1 (2024): 26339137231222481.

1. Intelligence in Nature

To explain how artificial intelligence is possible, one inevitably must address what "intelligence" means. Yet intelligence itself lacks a clear definition. When we mention intelligence, concepts such as thought, reason, cognition, consciousness, logic, and emotion come to mind. Although it is challenging to provide a singular, definitive definition, there is a consensus on the general characteristics of intelligence. Intelligence is a continuum, manifesting differently across scales and dimensions such as emotional, social, and artistic intelligence.

Despite such consensus, human intelligence has long been treated as the gold standard for measuring all forms of intelligence. Neuroscientist Geoffrey Jefferson argued that a machine could be considered intelligent only if it wrote a sonnet because it 'felt' emotions or thoughts and took pleasure in the act, rather than merely assembling symbols. Similarly, some philosophers believe that true intelligence in AI requires consciousness or self-awareness, whose fundamental characteristic is the phenomenological aspect of 'what it is like' to have an experience. On this view, for AI to be truly intelligent, it must have subjective experiences. This idea has a clear biocentric, even anthropocentric, tint.

In AI design, models have always drawn inspiration from natural systems and their evolutionarily refined designs. The contributions from neuroscience are particularly significant, from the early McCulloch-Pitts neuron model, through the parallel distributed networks of connectionism and deep learning, up to current calls in machine learning for a "neuro-AI" research paradigm. However, Friston and colleagues point out that AI design should not focus solely on the brain but should also embrace the variety of natural intelligences.

Friston and his colleagues believe that instead of designing AI by merely describing and analogizing natural intelligence, it might be more fruitful to uncover its underlying principles. Attempting to decouple these principles from biological systems could lead to advancements toward Artificial General Intelligence. But the question remains, what is the essence of intelligence?

2. First Principles of Intelligence — Active Inference

The exploration of the underlying principles of intelligence began to emerge in the cybernetics of the 1940s, defined by Norbert Wiener as "the study of control and communication in animals and machines." At that time, the focus of cybernetics was on life's self-organizing phenomena, where "feedback" and "bidirectional loops" played crucial roles.

W. R. Ashby's Law of Requisite Variety and the Good Regulator Theorem effectively explain the bidirectional loop between an individual and the environment, a relationship characterized by structural parallelism and an interactive process*. The Law of Requisite Variety states that to respond effectively to environmental changes, the variability within an individual should be at least as rich as the variability in the environment. This ensures that the individual has sufficient adaptive resources to meet external challenges. The Good Regulator Theorem, which Ashby formulated with Roger Conant, states that every good regulator of a system must be a model of that system: keeping a system in a stable state despite external environmental changes requires effective modeling and prediction of the environment.

*"Structural parallelism" means that the internal structure of an individual (such as cognitive, physiological, or information processing systems) corresponds with structures in the environment. This correspondence allows the individual to process environmental information efficiently and respond quickly to changes. "Interactive process" emphasizes the continuous dynamic interaction between the individual and the environment through actions, perceptions, feedback, etc., facilitating a two-way flow of information.
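The Law of Requisite Variety can be made concrete with a toy regulation game. The sketch below is only an illustration under stated assumptions: the world rule `outcome = (d - r) mod n` and the function name `min_outcome_variety` are hypothetical and do not come from the paper. It searches every possible regulator strategy by brute force and reports the smallest set of outcomes the regulator can hold the system to.

```python
from itertools import product


def min_outcome_variety(n_disturbances: int, n_responses: int) -> int:
    """Smallest number of distinct outcomes any regulator strategy can achieve.

    Toy world (an assumption for illustration): outcome = (d - r) mod n,
    so for a fixed response r, different disturbances give different outcomes.
    """
    n = n_disturbances
    best = n
    # A strategy assigns one response to every possible disturbance.
    for strategy in product(range(n_responses), repeat=n):
        outcomes = {(d - r) % n for d, r in enumerate(strategy)}
        best = min(best, len(outcomes))
    return best


if __name__ == "__main__":
    for k in (1, 2, 3, 6):
        print(k, "responses ->", min_outcome_variety(6, k), "distinct outcomes")
```

With six possible disturbances, the best achievable outcome variety falls from 6 to 3, 2, and 1 as the regulator gains 1, 2, 3, and 6 responses: only a regulator with as much variety as its environment can pin the outcome to a single value.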

Ashby's theorems capture how individuals maintain themselves through information exchange, with intelligence manifesting in modeling perceptions and actions for prediction. Active inference further develops this idea, accommodating the possibility of multi-scale intelligence. Active inference represents a physical formalization approach that views intelligence as adaptive behavior. It describes how agents accumulate evidence about the perceptible world through their generative models in a process known as "self-evidencing."

- Self-Evidencing — The Effort of Intelligent Self-Maintenance

Active inference suggests that intelligence is not limited to biological entities but is closely related to the individuality of objects. The individuality of physical entities relies on what is known as a sparse causal network*, which makes the entity a system that is open yet bounded. The sparsity determines which information passes through and which is blocked, thus maintaining both the openness and the boundedness of the individual.

*Sparsity, simply put, goes hand in hand with density. If a set of data is meaningful, it will exhibit characteristics of being concentrated in certain areas and dispersed in others. Sparsity and density together define the meaning of data, indicating what data is and is not.

In a constantly changing world, the boundedness of a system is achieved through predictable and controllable boundary conditions, forming a generative model that interacts with the external world while maintaining relative stability. Like biological entities, intelligent agents exchange information with the environment for self-maintenance, a function Jakob Hohwy refers to as self-evidencing. This capability is embedded in the generative models of the sensed world, collecting evidence favorable to the model.

Free energy and Bayesian mechanics form the two main pillars of the active inference framework. Agents maintain their model of the world by minimizing free energy. In this process, free energy is understood as the difference between complexity and accuracy. Minimizing free energy means pursuing the greatest accuracy with the least complexity. The self-evidencing process involves inferring the environment's state based on current sensory input and past belief states. It also includes actions that modify sensory input, not only to seek information but to seek it according to preferences, demonstrating curiosity and creativity. Curiosity, as a driving force to resolve uncertainty, is a fundamental characteristic of intelligent systems.
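The "complexity minus accuracy" reading of free energy can be checked numerically on a two-state model. The sketch below is a minimal illustration, not the paper's implementation; the function name `free_energy` and the numbers are assumptions chosen for the example. Complexity is the KL divergence between the agent's belief and its prior, and accuracy is the expected log-likelihood of the observation under that belief.

```python
import math


def free_energy(q, prior, likelihood):
    """Variational free energy F = complexity - accuracy for a discrete model.

    q          : approximate posterior over hidden states, q(s)
    prior      : prior over hidden states, p(s)
    likelihood : p(o | s) for the one observation actually received
    """
    # Complexity: how far the belief has moved away from the prior (KL divergence).
    complexity = sum(qi * math.log(qi / pi) for qi, pi in zip(q, prior) if qi > 0)
    # Accuracy: how well the belief explains the observation.
    accuracy = sum(qi * math.log(li) for qi, li in zip(q, likelihood) if qi > 0)
    return complexity - accuracy


# Hypothetical numbers: two hidden states, one observation.
prior = [0.5, 0.5]
likelihood = [0.9, 0.2]
evidence = sum(p * l for p, l in zip(prior, likelihood))
posterior = [p * l / evidence for p, l in zip(prior, likelihood)]

if __name__ == "__main__":
    print("F at the prior belief:    ", free_energy(prior, prior, likelihood))
    print("F at the exact posterior: ", free_energy(posterior, prior, likelihood))
    print("negative log evidence:    ", -math.log(evidence))
```

Free energy is lowest at the exact Bayesian posterior, where it equals the negative log evidence. This is the sense in which minimizing free energy amounts to gathering evidence for one's own model.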

The generative model that agents possess is a mark of their individuality. This individuality is maintained through self-supervision and self-evidencing. Agents process information with a specific model, meaning they engage with the world through a specific perspective. Just as the otherness of others shapes my perspective, sparse causal networks shape the perspective of the generative model by regulating which information is allowed through. Thus, sparse causal networks reveal that agents are essentially relational entities, defining themselves through their interactions with different entities. On a broader scale, intelligence, facilitated by sparse causal networks, is distributed among different agents across various scales.

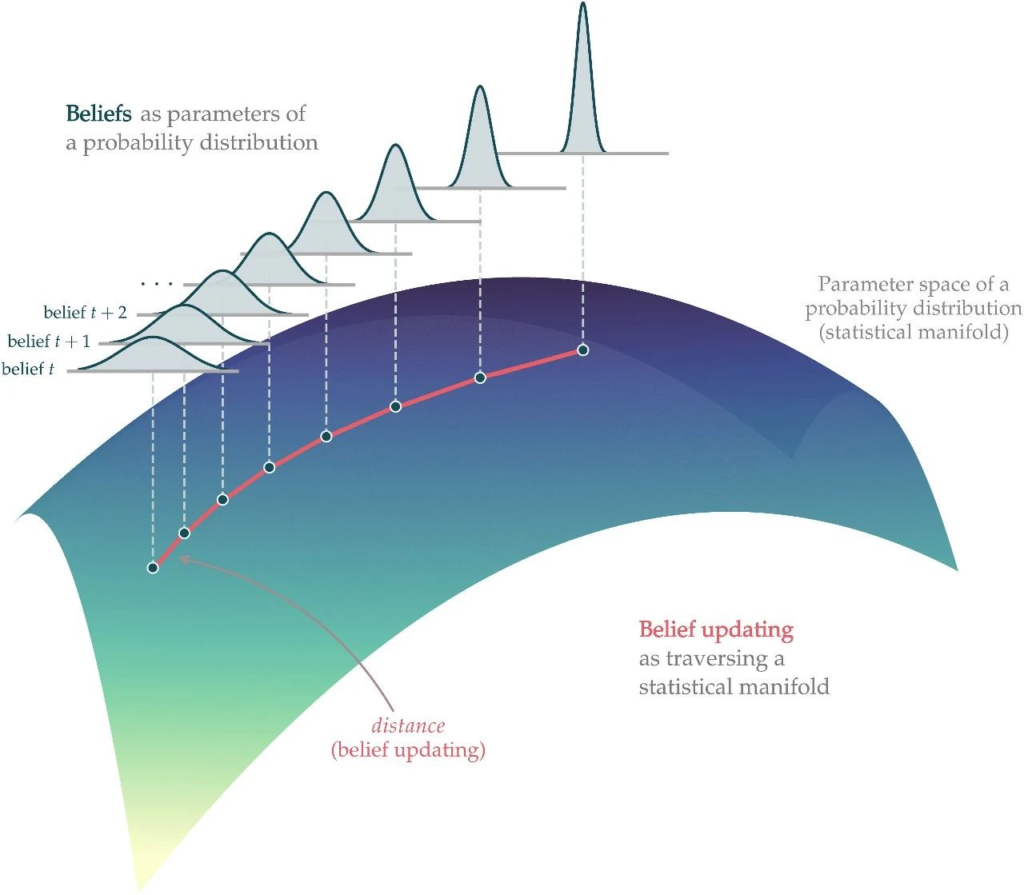

- The Dynamics of Intelligence — Belief Updating

The dynamic nature of intelligence is demonstrated in its capacity to update beliefs, and Bayesian mechanics provides a theoretical framework for describing precise movements within belief space. From a mathematical perspective, belief updating occurs in the abstract space of statistical manifolds, where each point corresponds to a probability distribution. This abstract space imposes constraints on information transmission at the physical or biological level of artificial intelligence systems, requiring that transmittable information be defined as sufficient statistics or parameters of probability distributions. Such information processing involves measuring uncertainty and is based on the precision, or inverse variance, of the probability distributions over variables. Therefore, AI systems designed according to these principles are capable of measuring uncertainty* and taking actions to reduce it.

*Uncertainty typically arises from several sources, including noise inherent in the data measurement process itself; the ambiguity of hidden variables in data: (Is this image a duck or a rabbit?); and noise in model operations: (What should a rabbit look like?). Overcoming these uncertainties is crucial for learning.

▷ Belief updating can be represented as movement within an abstract space, where each point corresponds to a probability distribution. Figure source: paper.
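A one-dimensional Gaussian belief gives the simplest picture of such a movement in belief space: the belief is fully described by two sufficient statistics, a mean and a precision (inverse variance). The sketch below uses the standard conjugate Gaussian update; the function name `gaussian_update` and the example numbers are assumptions for illustration.

```python
def gaussian_update(prior_mean, prior_precision, observation, obs_precision):
    """Combine a Gaussian prior belief with one noisy Gaussian observation.

    Precisions (inverse variances) add, and the new mean is a
    precision-weighted average of the prior mean and the observation.
    """
    post_precision = prior_precision + obs_precision
    post_mean = (prior_precision * prior_mean + obs_precision * observation) / post_precision
    return post_mean, post_precision


if __name__ == "__main__":
    # A vague prior (precision 1) meets a more reliable observation (precision 3).
    mean, precision = gaussian_update(0.0, 1.0, 2.0, 3.0)
    print("posterior mean:", mean, "posterior precision:", precision)
```

The more precise source pulls the belief further toward itself, and the posterior is always more precise than either input, which is what it means to measure uncertainty and then reduce it.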

The purposiveness of a model is closely related to its ability to handle uncertainty. Only when the model structure incorporates uncertainty can it accurately represent targets and make precise predictions. For this reason, the probabilistic approach has become the normative theory of learning.

This contrasts sharply with the optimization strategies pursued in reinforcement learning. Reinforcement learning begins with a series of random actions and learns through trial and error in interaction with the environment, maximizing returns in order to achieve predefined objectives. Although reinforcement learning systems also encounter uncertainty while learning, that uncertainty is not explicitly represented in the system's structure or parameters. As a result, these systems cannot 'measure' uncertainty and therefore cannot select actions for specific goals or preferences. This approach differs greatly from the 'self-evidencing' strategy used in active inference.
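To make the contrast concrete, the active inference literature commonly scores actions with an expected free energy that splits into risk (how far predicted outcomes stray from preferred outcomes) and ambiguity (how uninformative the expected observations are). The article does not spell out this formula, so the sketch below is an illustration under that assumption; the function names and all numbers are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)


def kl(p, q):
    """KL divergence KL[p || q] between two discrete distributions."""
    return sum(x * math.log(x / y) for x, y in zip(p, q) if x > 0)


def expected_free_energy(q_states, likelihood, preferences):
    """Risk + ambiguity for one action.

    q_states    : predicted hidden states after the action, q(s | action)
    likelihood  : likelihood[s][o] = p(o | s)
    preferences : preferred distribution over outcomes, p(o)
    """
    n_outcomes = len(preferences)
    # Predicted outcomes: q(o) = sum_s q(s) p(o | s)
    q_outcomes = [
        sum(qs * likelihood[s][o] for s, qs in enumerate(q_states))
        for o in range(n_outcomes)
    ]
    risk = kl(q_outcomes, preferences)
    ambiguity = sum(qs * entropy(likelihood[s]) for s, qs in enumerate(q_states))
    return risk + ambiguity


# Hypothetical world: state 0 gives clear observations, state 1 gives coin flips.
likelihood = [[0.9, 0.1], [0.5, 0.5]]
preferences = [0.8, 0.2]
g_a = expected_free_energy([1.0, 0.0], likelihood, preferences)  # action leading to state 0
g_b = expected_free_energy([0.0, 1.0], likelihood, preferences)  # action leading to state 1

if __name__ == "__main__":
    print("G(a) =", g_a, " G(b) =", g_b)
```

The action leading to the clear-sighted state scores lower, so it is preferred both because its outcomes match the preferences and because it resolves uncertainty. Both terms are only computable because uncertainty is represented explicitly in the model.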

From a theoretical perspective, the probabilistic approach is the preferred way to design intelligent systems, but in practice it can introduce computational challenges, especially when irrelevant variables must be marginalized out of the model to obtain accurate "beliefs" about specific variables. This approach contrasts with AI systems that focus primarily on optimizing parameters. Current state-of-the-art AI systems are designed as optimization machines with general-purpose objectives that nonetheless solve only domain-specific problems. According to the principles of active inference, optimization in itself is not the primary task of intelligence.

The active inference strategy places equal importance on complexity and accuracy, achieving minimal complexity by streamlining data in quantity and dimensions, and explaining data in the simplest, coarsest manner. This approach allows for a simpler, lower-dimensional representation of the world, making the system more capable of prediction and interpretation. Allowing sufficient complexity to explain information entropy objectively satisfies Ashby's Law of Requisite Variety.

Moreover, because complexity carries a thermodynamic cost, as described by Landauer's principle, minimizing complexity promotes more efficient energy use. Minimizing complexity is thus a key step from big data towards "smart data," providing a pathway for the development of a more general and robust artificial intelligence.
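Landauer's principle sets a lower bound of k_B · T · ln 2 joules on the energy dissipated when one bit of information is erased. The short sketch below simply evaluates that bound; the function name and the choice of 300 K as "room temperature" are assumptions for illustration.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the SI since 2019


def landauer_limit_joules(temperature_kelvin: float, bits: float = 1.0) -> float:
    """Minimum energy (in joules) dissipated when erasing `bits` bits at a given temperature."""
    return bits * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


if __name__ == "__main__":
    print("per bit at 300 K:", landauer_limit_joules(300.0), "J")
    print("per gigabyte at 300 K:", landauer_limit_joules(300.0, bits=8e9), "J")
```

The bound is tiny per bit (about 2.87e-21 J at 300 K) but scales linearly with the amount of information a model must overwrite, which is why a less complex belief update is, in principle, a cheaper one.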

Finally, active inference formally describes the multi-scale nature of natural intelligence and allows for separated time scales, laying the foundation for designing multi-scale artificial intelligence.

3. Building Intelligent Ecosystems

Intelligence is not only multi-scaled but also highly individualized, with each agent possessing a unique worldview and perspective. This raises a question: How can agents with different perspectives coexist harmoniously? As a result, the concept of building a benign intelligent ecosystem has emerged. A benign ecosystem requires that participating agents possess a communicable language, create shared narratives during interactions, and have the ability to adopt perspectives.

- The Lesson of the Tower of Babel

The significance of language to intelligence cannot be overstated. Metaphorically, the story of the Tower of Babel highlights the importance of language. According to the "Book of Genesis," initially, the whole world had one language, and people wanted to build a tower to heaven. This led God to worry that "nothing they plan to do will be impossible for them." Consequently, God "confused their language so they would not understand each other." The construction of the Tower of Babel failed due to the disappearance of a common language, forcing people to scatter and speak only dialects understandable within limited regions. This story underscores the critical role of a communicable language.

Cecilia M. Heyes suggests that intelligence and language have almost co-evolved, acting as scaffolds for each other, both developing through social interactions. From an evolutionary perspective, the function of language is to facilitate mutual communication and understanding, thereby fostering cooperation on a larger scale. From an epistemological standpoint, the referential function of language allows individuals to transcend personal confines and grasp key aspects of the world.

- Perspective Adoption and Shared Narratives

Language is tightly linked to a unique way of updating information. Consider two scenarios: one, where you and an elephant are locked in a pitch-dark room, and another, where you, five friends, and an elephant are in the same situation. In the first scenario, you update your beliefs about your environment by continuously exploring, reducing uncertainty about the current situation until you have a clear understanding—that you and an elephant are in the same room. In the latter scenario, you communicate with your friends, quickly learning about the elephant in the room. Much like the proverbial blind men and the elephant, each of you touches a part and shares it with the others.

Indeed, while the process of belief updating is similar in both scenarios, the dynamics differ significantly. The former is merely an individual accumulating evidence to update posterior beliefs; the latter is based on a shared generative model with a common language and beliefs. You draw conclusions by comparing similarities in beliefs and inferring about the shared environment. This shared generative model greatly enhances the predictability of the world, serving as a bridge for mutual understanding and creating a common belief space.

The common belief space is rooted in the adoption of perspectives and the sharing of narratives. Both are central to social cognition and have recently received special attention in research on generative artificial intelligence. Generative neural networks can recreate the images, music, and other media they encounter, representing a dynamic interaction between artificial and natural intelligence. A truly intelligent generative AI, however, would go further, showing sustained curiosity about the human world and responding to it exploratively.

- Belief Propagation and Communication Protocols

Can current artificial intelligence systems share information based on a communicable language? The answer is no. The universal language of current AI systems involves vectors, embedded within the information input space—be it text or situations—that translate data through an existing dictionary. This process is akin to translating between languages, though vectors themselves cannot be directly converted into higher-order linguistic forms.

Friston and others propose a concept for a shared narrative through belief propagation over factor graphs, similar to the example of touching an elephant with friends. This method aims to realize a shared intelligent ecosystem. Each generative model corresponds to a factor graph. Factor graphs dictate specific information transfer paths and facilitate implicit computational modeling. Their nodes correspond to factors of Bayesian beliefs or probability distributions. For example, beliefs about an object's latent state can be decomposed into "what it is" and "where it is." These beliefs together specify a unique object in external space; notably, knowing what something is and where it is are largely independent. The edges of the factor graph correspond to messages transmitted between factors that support belief updating.

Additionally, each node on the factor graph can be divided into many smaller subgraphs, or sub-factor graphs. Sub-factor graphs hold internal information and can acquire external information through communication with other sub-factor graphs. In principle, this modeling means that each sub-factor graph (or agent) can observe other sub-factor graphs.
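The elephant scenario can be sketched as message passing at a single variable node of a factor graph: each friend sends a likelihood message over the same set of hypotheses, and the shared belief is the normalized product of the prior and all incoming messages. This is a minimal illustration, not the protocol proposed in the paper; the hypotheses, the numbers, and the function name `combine_messages` are assumptions.

```python
def combine_messages(prior, messages):
    """Sum-product update at one variable node.

    prior    : dict mapping hypothesis -> prior probability
    messages : list of dicts mapping hypothesis -> likelihood of what a sender felt
    Returns the normalized posterior belief over hypotheses.
    """
    belief = dict(prior)
    for message in messages:
        for hypothesis in belief:
            belief[hypothesis] *= message[hypothesis]
    total = sum(belief.values())
    return {hypothesis: value / total for hypothesis, value in belief.items()}


# Hypothetical messages from three friends touching different parts in the dark.
prior = {"elephant": 1 / 3, "snake": 1 / 3, "tree": 1 / 3}
trunk = {"elephant": 0.5, "snake": 0.4, "tree": 0.1}
leg = {"elephant": 0.5, "snake": 0.1, "tree": 0.4}
ear = {"elephant": 0.8, "snake": 0.1, "tree": 0.1}

shared_belief = combine_messages(prior, [trunk, leg, ear])

if __name__ == "__main__":
    for hypothesis, probability in shared_belief.items():
        print(f"{hypothesis}: {probability:.3f}")
```

No single message settles the question, yet the combined belief is strongly concentrated on "elephant". The update only works because every sender expresses its evidence over the same shared set of hypotheses, which is the role the common generative model plays in the text.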

The design philosophy of factor graphs is modeled after the coarse-grained generative models used in human communication, aiming to balance complexity and accuracy. It achieves this by minimizing model complexity, using only the necessary granularity to maintain an accurate description of observations. Meanwhile, this coarse-grained generative model pushes intelligent system design towards symbolization, identification, Boolean logic, and causal reasoning. Also, because data can reconstruct the possible distributions of molecules at specific levels, the model can flexibly swap texts and exchange information with each other.

Neurobiology indicates that the brain is a hierarchical structure, where higher-order factors use attention mechanisms to filter lower-order information. To have such a hierarchical structure, a global factor graph requires new communication protocols. These protocols must instantiate variational message passing on graphs over discrete belief spaces. The messages must contain vectors of sufficient statistics and clearly identify the shared factors they refer to. Additionally, they must include information about their sources, similar to how clusters of neurons in the brain receive input containing spatial addresses from other parts of the brain.
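The three requirements on a message (a vector of sufficient statistics, an identified shared factor, and a source address) can be pictured as a small record type. The sketch below is purely hypothetical: the class name, field names, address format, and validity check are assumptions made for illustration and are not part of any protocol defined in the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class BeliefMessage:
    """One message passed between agents on a shared factor graph (hypothetical layout)."""

    source_address: str                       # where the message comes from
    factor_id: str                            # which shared factor the belief is about
    sufficient_statistics: Tuple[float, ...]  # e.g. probabilities of a categorical belief


def is_well_formed(message: BeliefMessage, known_factors: set) -> bool:
    """Check that a message names a source, refers to a shared factor, and carries a belief."""
    return (
        bool(message.source_address)
        and message.factor_id in known_factors
        and len(message.sufficient_statistics) > 0
    )


if __name__ == "__main__":
    shared_factors = {"what", "where"}
    msg = BeliefMessage("agent-7/touch", "what", (0.8, 0.1, 0.1))
    print(is_well_formed(msg, shared_factors))
```

Keeping the factor identifier separate from the statistics mirrors the "what it is" and "where it is" decomposition described earlier: a receiver can route each message to the right factor without needing to inspect its contents.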

In summary, the first step towards achieving distributed, emergent shared intelligence is building the next generation of modeling and communication protocols, which include an irreducible spatial addressing system, compatible vectors, and shared representations of human knowledge based on vectors.

4. Stages of Development in Artificial Intelligence

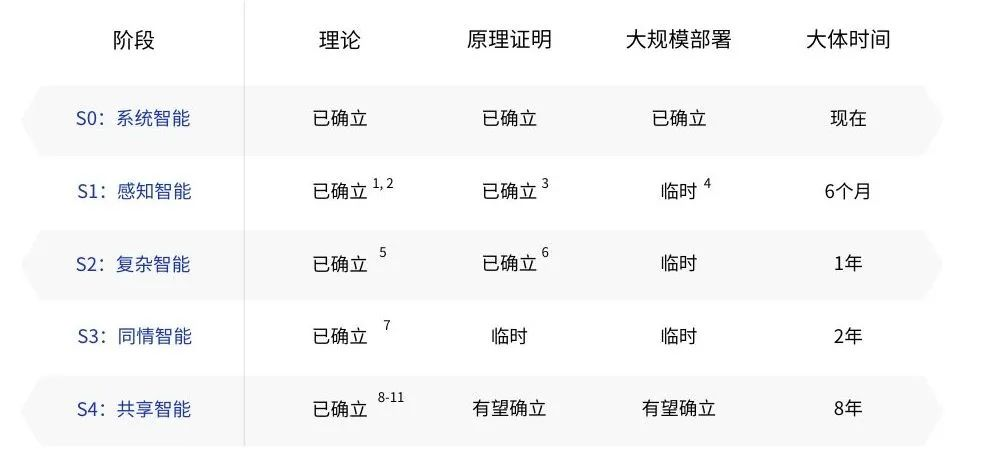

In their article, Friston and colleagues propose stages of development for artificial intelligence based on active inference, progressing from the current stage of System Intelligence, through Perception Intelligence that incorporates preferences and perspectives, to more advanced forms such as Shared Intelligence.

- S0: System Intelligence

The core feature of the current stage of System Intelligence is the systematic matching of sensory inputs to action outputs to optimize a value function defined by the system. Deep learning and Bayesian reinforcement learning are typical examples of this stage.

- S1: Perception Intelligence

Perception Intelligence is the next developmental goal. It refers to the ability to respond to sensory information and maximize the acquisition of expected information and value through reasoning about the causes of actions. Expectation and value imply that the model has preferences.

- S2: Complex Intelligence

Compared to Perception Intelligence, Complex Intelligence not only predicts the structure of actions but also the resulting knowledge state, further forming representations about the world. Intelligence at this stage uses modal logic, quantum computing, and category theory for formal expressions, aligning with the requirements for Artificial General Intelligence.

- S3: Sympathetic Intelligence

Sympathetic Intelligence is able to distinguish the nature and tendencies of other intelligent agents and recognize its own predisposed states, which implies possession of a Theory of Mind. This enables it to differentiate itself from other agents and recognize both its own and others' intentions, with the capability for perspective adoption and narrative sharing.

- S4: Shared (or Super) Intelligence

Shared Intelligence is based on the interactions among agents with Sympathetic Intelligence, corresponding to what is commonly referred to as Super Artificial Intelligence. The distinction lies in the fact that the conception of Super AI is based on integrated individuals, whereas Shared Intelligence emerges from a superspace network of distributed collective intelligence.

▷ Figure: The stages of artificial intelligence in active inference. Theory: The basis of belief updating (i.e., reasoning and learning) is supported by formal calculus (such as Bayesian mechanics), with clear connections to the physics of self-organization in open systems far from equilibrium; Proof of Principle: Software instances of formal (mathematical) schemes, typically utilizing classical (i.e., von Neumann) architecture; Large-scale Deployment: The practical, large-scale, and efficient application of theoretical principles (i.e., methodologies) in real-world environments (e.g., edge computing, robotics, and variational message passing on the internet). The corresponding references are as follows:

1 Friston, K.J. A free energy principle for a particular physics. doi:10.48550/arXiv.1906.10184 (2019). (Friston, 2019).

2 Ramstead, M.J.D. et al. On Bayesian Mechanics: A Physics of and by Beliefs. doi:10.48550/arXiv.2205.11543 (2023). (Ramstead et al., 2023).

3 Parr, T., Pezzulo, G. & Friston, K.J. Active Inference: The Free Energy Principle in Mind, Brain, and Behavior. (MIT Press, 2022). doi:10.7551/mitpress/12441.001.0001. (Parr et al., 2022).

4 Mazzaglia, P., Verbelen, T., Catal, O. & Dhoedt, B. The Free Energy Principle for Perception and Action: A Deep Learning Perspective. Entropy 24, 301, doi:10.3390/e24020301 (2022). (Mazzaglia et al., 2022).

5 Da Costa, L. et al. Active inference on discrete state-spaces: A synthesis. Journal of Mathematical Psychology 99, 102447, doi:10.1016/j.jmp.2020.102447 (2020). (Da Costa et al., 2020).

6 Friston, K.J., Parr, T. & de Vries, B. The graphical brain: Belief propagation and active inference. Network Neuroscience 1, 381-414, doi:10.1162/NETN_a_00018 (2017). (Friston et al., 2017).

7 Friston, K.J. et al. Generative models, linguistic communication and active inference. Neuroscience and Biobehavioral Reviews 118, 42-64, doi:10.1016/j.neubiorev.2020.07.005 (2020). (Friston et al., 2020).

8 Friston, K.J., Levin, M., Sengupta, B. & Pezzulo, G. Knowing one’s place: a free-energy approach to pattern regulation. Journal of the Royal Society Interface 12, doi:10.1098/rsif.2014.1383 (2015). (Friston et al., 2015).

9 Albarracin, M., Demekas, D., Ramstead, M.J.D. & Heins, C. Epistemic Communities under Active Inference. Entropy 24, doi:10.3390/e24040476 (2022). (Albarracin et al., 2022).

10 Kaufmann, R., Gupta, P., & Taylor, J. An Active Inference Model of Collective Intelligence. Entropy 23 (7), doi:10.3390/e23070830 (2021). (Kaufmann et al., 2021).

11 Heins, C., Klein, B., Demekas, D., Aguilera, M., & Buckley, C. Spin Glass Systems as Collective Active Inference. International Workshop on Active Inference 2022, doi:10.1007/978-3-031-28719 (2022). (Heins et al., 2022).

Although our discussion begins with the distributed collective intelligence of insects and trees, the intelligence of insects does not provide an ideal prototype for building intelligent ecological networks, because this form of collective intelligence consists of replaceable, rather than unique, nodes. An intelligent ecosystem requires belief sharing based on Sympathetic Intelligence, in which the individuality of each intelligence is preserved. This idea is quite intuitive. In human societies, knowledge is valuable and closely tied to the life of the individual, and each person's life experience is unique because their perspective is unique. In the realm of intelligence, each intelligent entity has generative models adapted to its own self-maintenance, and these are irreplaceable.

5. Necessary Ethical Considerations

Ethical issues are fundamentally normative and do not vanish with the advancement of technology. Ethical concerns related to collaborations and frameworks in artificial intelligence will become more pronounced during the developmental stages of Sympathetic Intelligence and Shared Intelligence.

Designers should strive to avoid creating alliances that either eliminate perspective diversity or incorporate a predator-prey dynamic. Furthermore, collective intelligence may convert biases present in data into systemic biases. Model use itself does not automatically eliminate biases; it may preserve or even amplify them. Since system biases are essentially extensions of societal biases, they cannot be corrected solely through technology but must be addressed also through appropriate social policies, governmental regulation, and ethical standards.

6. Conclusion

Looking back at the exploration of Artificial General Intelligence (AGI) and Super Artificial Intelligence (ASI), Friston and colleagues offer us a distinctly different perspective. By employing the principles of active inference, they construct an ecosystem interwoven with natural and artificial intelligence.

Active inference reveals that the essence of intelligence lies in continuously seeking evidence to validate its understanding of the world. Within this framework, agents achieve self-evidencing through belief propagation or message passing on graphs or networks. Active inference not only explains collective intelligence but also motivates the development of the next generation of universal hyperspace modeling and communication protocols.

In the future, within a belief space grounded in mutual understanding and sharing, our greatest challenge may shift from the technology itself to how we understand and define the world we aim to create.