With the field of artificial intelligence fiercely competitive, a recent project billed as "the AI initiative endorsed by Bill Gates" has captured public attention. Funding from the Gates Foundation marks the official start of the "Thousand Brains Project."

The concept of "Thousand Brains" is not a novel one. As early as 2005, the project's leader, Jeff Hawkins, founded Numenta, a technology company aimed at creating biologically-inspired artificial intelligence. In 2021, Hawkins published a book titled A Thousand Brains.

In many ways, the emergence of the "Thousand Brains Project" is not surprising; it represents a natural progression, merging brain science theories with artificial intelligence as technology continues to advance. However, what piques our curiosity is: why now? Why the "Thousand Brains Project"?

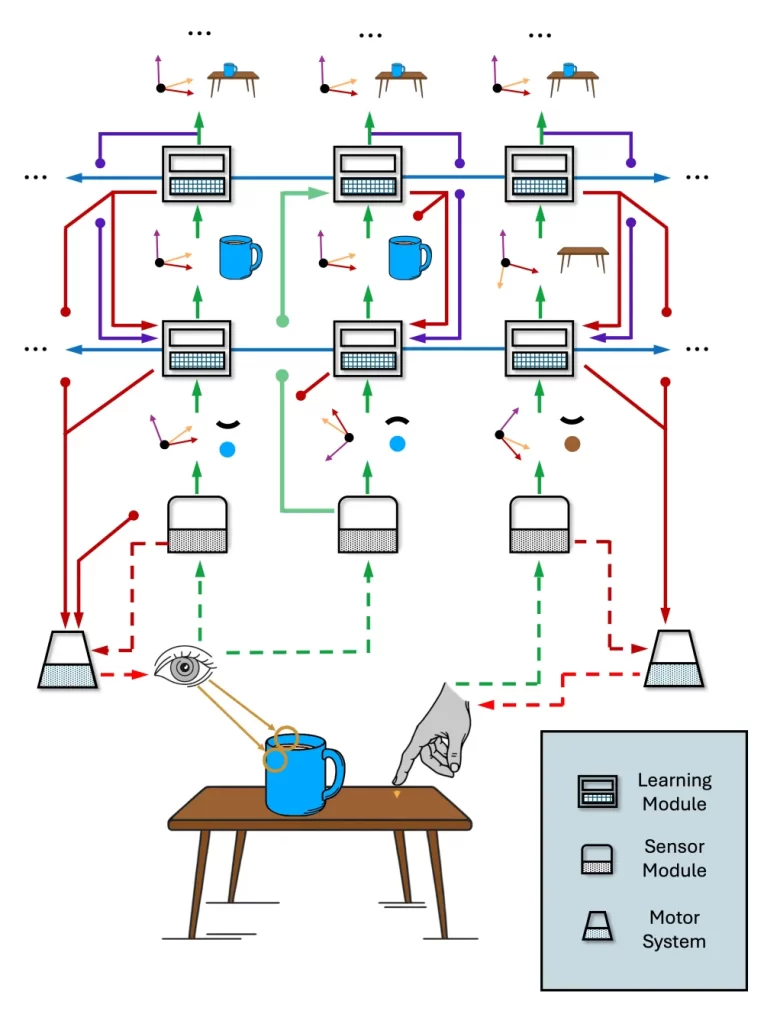

▷ An illustration from Numenta’s website provides an introduction to the Thousand Brains Project.

1. Why Now?

Traditional deep neural networks remain the dominant approach in artificial intelligence. Each "neuron" in these networks is a simple computational unit that processes its inputs and works in tandem with other neurons to solve complex problems. Such networks are widely applied in areas such as image recognition and text prediction. A network with multiple layers of neurons is termed a "deep" neural network. Both the "neuron" and the "layered" structure were originally inspired by brain research from the last century. Since those foundational discoveries, however, neuroscience and artificial intelligence have diverged as each field pursued its own questions in depth.

Today, deep neural networks surpass human performance on a number of tasks, from specialized applications like skin cancer detection to complex games. Particularly since large language models transformed public perception of AI last year, the field's momentum has seemed unstoppable. It can appear that, given vast amounts of data and powerful computation, the long-imagined emergence of strong AI is inevitable. However, current AI systems also reveal significant shortcomings. As large language models scale, for example, their energy consumption becomes increasingly alarming. Experiments have also shown that neural networks are often unstable: a minor perturbation of the input, in some cases a change to a single pixel, can cause an object to be misidentified.

In light of these deficiencies, researchers are beginning to explore whether alternative approaches might enable further breakthroughs in AI. The "Thousand Brains Project" seeks to develop a new AI framework by reverse engineering the cerebral cortex. Hawkins remarked, "Today's neural networks are built on the foundations of neuroscience predating 1980. Since then, we have gained new insights in neuroscience, and we hope to use this knowledge to advance artificial intelligence."

The "Thousand Brains Project" is a testament to Hawkins' perseverance and accumulated knowledge, as well as another bold attempt by humanity to explore the possibilities of artificial intelligence in this era. But what is its true value and feasibility? To answer this, we must delve deeper into its underlying principles.

2. What is the "Thousand Brains Project"?

The "Thousand Brains Project" (TBP) was officially launched on June 5th at Stanford University's Human-Centered AI Institute. Jeff Hawkins, however, had been preparing for it for many years. According to the technical manual published on Numenta’s website, the Thousand Brains Project encompasses four long-term plans.

First, the core objective of the Thousand Brains Project is to develop an intelligent sensorimotor system. Its primary long-term goal is to establish a unified platform and communication protocol for such a system. This unified interaction protocol allows different custom modules to interact through a common interface. For example, a module designed for "drone flight optimized by bird’s-eye view" and another for "controlling a smart home system with various sensors and actuators" can interact according to TBP’s rules. This framework enables users to develop new modules based on their specific needs while ensuring compatibility with existing ones.

To achieve universal interaction and communication, the Thousand Brains Project draws on neuroscience research into the cerebral cortex. Like the term "neural network," the project's name borrows from brain anatomy: the cerebral cortex consists of thousands of cortical columns, each divided into multiple layers of neurons. Numenta researchers argue that traditional deep networks build a single world model, processing data step by step from simple features to complex objects. In contrast, the "Thousand Brains Theory" suggests the brain integrates many world models generated by many cortical columns, as if each brain were running thousands of brains in parallel.

The second goal of the Thousand Brains Project is to foster a new form of machine intelligence that operates on principles more closely aligned with how the brain learns, differing from today's popular AI methodologies. Communication between cortical columns—or different modules—is achieved through "long-range connections," mirroring the inter-regional communication observed in the cerebral cortex. Hawkins believes this modular structure will make his approach easily scalable, similar to the critical period in brain development when cortical columns are repeatedly replicated. However, Numenta notes in the technical manual that strict adherence to all biological details is not required in practical implementation. Instead, the Thousand Brains Project draws on concepts of neocortical function and long-range connectivity rather than rigidly following neurobiological specifics.

▷ Illustration of potential implementations from the Thousand Brains Project technical manual. Detailed annotations can be found at https://www.numenta.com/wp-content/uploads/2024/06/Short_TBP_Overview.pdf

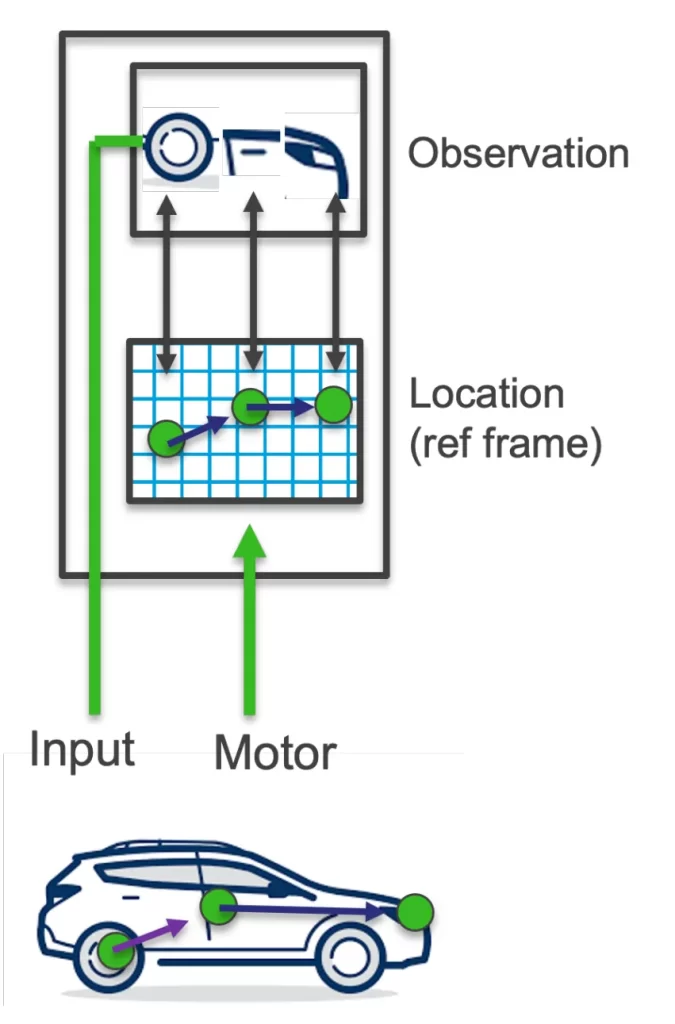

Third, the AI developed through this project will also rely on research into "reference frames" in the cerebral cortex. In mammalian brains, place cells encode positional memory, and grid cells help map positions in space. The cerebral cortex uses these reference frames to store and understand the continuous stream of sensorimotor data it receives. The Thousand Brains Project aims to integrate these neuroscience discoveries into a cohesive framework. Hawkins explains, "The brain constructs data in two-dimensional and three-dimensional coordinate systems, reproducing the structure of objects in the real world. In contrast, deep networks do not fundamentally understand the world, which is why they fail to recognize objects when we make subtle changes to an image's features. By using reference frames, the brain can understand how an object’s model changes under different conditions."

Finally, the Thousand Brains Project is essentially a software development toolkit. Its developers want it to handle as wide a range of tasks as possible and to make it easy for users to share, apply, and test one another's work.

In summary, the Thousand Brains Project is fundamentally a software development toolkit for robotic sensorimotor systems, incorporating certain neuroscience principles. Hawkins mentioned that potential applications of this new AI platform might include complex computer vision systems—such as analyzing what is happening in a scene using multiple cameras—or advanced touch systems to help robots manipulate objects.

"The Gates Foundation is very interested in sensorimotor learning to promote global health," Hawkins added. "For example, when ultrasound is used to image a fetus, it builds a model by moving the sensor, which is essentially a sensorimotor problem." Therefore, the Gates Foundation’s feasibility analysis of this project is conducted more from an engineering perspective.

3. How Does the "Thousand Brains Project" Work?

As previously mentioned, the "Thousand Brains Project" (TBP) relies primarily on two principles of information processing in the brain: "cortical columns" and "reference frames." In engineering terms, these correspond to "modularization" and "reference systems." The TBP system consists of three main types of module: sensor modules, learning modules, and actuator modules. They are interconnected and communicate through a unified protocol. As long as each module exposes the appropriate interfaces, its internal workings can vary freely.
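To make the idea of a unified protocol concrete, here is a minimal Python sketch. The names `Message`, `TouchSensor`, `CountingLearner`, and `run_pipeline` are hypothetical and are not taken from the TBP manual or code; the message fields are an assumption based only on the description above. The point is simply that modules with very different internals can be chained as long as they exchange the same message type.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


@dataclass(frozen=True)
class Message:
    """Hypothetical modality-independent message: what was sensed, and where."""
    location: tuple                      # position in a shared body frame
    features: dict = field(default_factory=dict)
    sender: str = "unknown"


class Module(Protocol):
    """Anything with a step() that maps messages to messages can be plugged in."""
    def step(self, inbox: List[Message]) -> List[Message]: ...


class TouchSensor:
    """Toy sensor module: wraps a raw pressure reading in a Message."""
    def __init__(self, name, location):
        self.name = name
        self.location = location

    def step(self, inbox):
        raw_pressure = 0.8  # stand-in for a real sensor reading
        return [Message(self.location, {"pressure": raw_pressure}, self.name)]


class CountingLearner:
    """Toy learning module: only records which feature names it has received."""
    def __init__(self):
        self.seen = []

    def step(self, inbox):
        self.seen.extend(sorted(m.features) for m in inbox)
        return []  # a real learning module would emit hypotheses or goals


def run_pipeline(modules: List[Module], n_steps=1):
    """Pass messages through the modules in order, n_steps times."""
    messages = []
    for _ in range(n_steps):
        for module in modules:
            messages = module.step(messages)
    return messages


sensor = TouchSensor("fingertip", (0.0, 0.0, 0.0))
learner = CountingLearner()
run_pipeline([sensor, learner], n_steps=2)
print(learner.seen)
```

Because `run_pipeline` depends only on the `step` interface, swapping the toy touch sensor for a camera or lidar wrapper would not require changing the learner.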

In an interview, Hawkins stated, "Once we learn how to construct a cortical column, we can build more at will." So, how is each module realized within the TBP?

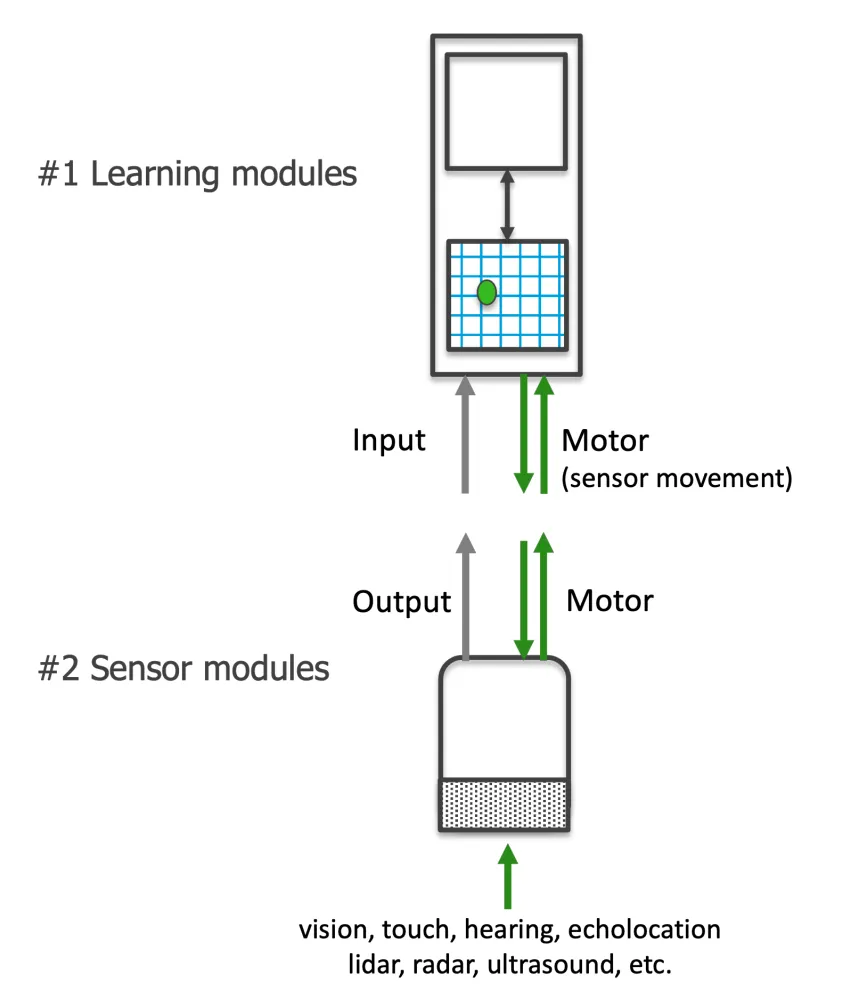

First, the sensor module is responsible for receiving and processing raw sensory input. According to the basic principles of the TBP, the processing of any specific modality (such as vision, touch, radar, or lidar) must occur within the sensor module. Each sensor module acts similarly to the retina, collecting information from a small sensory region—whether it's tactile information from a patch of skin or pressure data from a mouse's whisker. This localized raw data is converted into a unified data format by the sensor module and transmitted to the learning module, akin to how the retina converts light signals into electrical signals. Additionally, another critical function of the sensor module is coordinate transformation, which calculates the position of features relative to the sensor and the sensor relative to the "body," thereby determining the feature's position within the body's coordinate system. In summary, the sensor module transmits the current position and the external stimuli sensed at that position to the learning module in a generalized format.
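The coordinate transformation described above can be illustrated with a small sketch. It assumes a flat, two-dimensional world and a hypothetical `Pose2D` class; a real system would work in three dimensions, and nothing here is taken from the TBP implementation. Given the feature's position relative to the sensor and the sensor's pose relative to the body, composing the two yields the feature's position in the body's coordinate system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Position and heading of a child frame expressed in its parent frame."""
    x: float
    y: float
    theta: float  # radians, counter-clockwise

    def apply(self, point):
        """Map a point from the child frame into the parent frame."""
        px, py = point
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (self.x + c * px - s * py, self.y + s * px + c * py)


def feature_in_body_frame(feature_rel_sensor, sensor_rel_body):
    """Rotate and translate a sensor-frame point into the body frame."""
    return sensor_rel_body.apply(feature_rel_sensor)


# Sensor sits at (1, 2) in the body frame, rotated 90 degrees.
sensor_pose = Pose2D(x=1.0, y=2.0, theta=math.pi / 2)
# A feature 0.5 units straight ahead of the sensor.
print(feature_in_body_frame((0.5, 0.0), sensor_pose))  # approximately (1.0, 2.5)
```

Once every sensor reports locations in the same body frame, downstream modules no longer need to know which sensor, or which modality, produced a given observation.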

▷ The sensor module receives and processes raw sensory input, which is then transmitted to the learning module through a universal communication protocol, allowing the learning module to learn and recognize models of objects in the environment.

The learning module is the core component of the TBP, responsible for processing and modeling sensorimotor data received from the sensor module. Each learning module operates as an independent recognition unit, and when combined, they can significantly enhance recognition efficiency (for example, identifying a "cup" by touching it with five fingers is much faster than with just one). The input to the learning module can be feature IDs from the sensor module or object IDs from lower-level learning modules, but they are still processed as feature IDs. These features or object IDs can be discrete (such as "red," "cylinder," etc.) or represented in higher-dimensional spaces (such as sparse distributed representations of color). Additionally, the learning module receives position information relative to the "body," and this reference frame, centered on the module itself, integrates space into a unified computational framework.

Based on the described feature and position information, higher-level learning modules can construct "composite objects" (such as assemblies or entire scenes). Beyond learning new models by "independently learning features" and "using a unified reference frame," the learning modules also communicate with each other using a standardized communication protocol through lateral connections. This communication, like the learning module itself, is independent of specific modalities. Therefore, learning modules under different modalities can compete or collaborate to "reach a consensus."
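One simple way to picture how several learning modules might "reach a consensus" is a weighted vote. The sketch below is an illustrative assumption, not the voting rule specified by the TBP: each module reports a score per candidate object, scores are normalized so that every module has equal say, and the object with the largest combined share wins. The function names `normalize` and `vote` are hypothetical.

```python
from collections import defaultdict


def normalize(scores):
    """Scale scores so they sum to 1 (left unchanged if they sum to 0)."""
    total = sum(scores.values())
    return {k: v / total for k, v in scores.items()} if total else dict(scores)


def vote(module_beliefs):
    """Sum each module's normalized belief per object and return the winner."""
    tally = defaultdict(float)
    for beliefs in module_beliefs:
        for object_id, weight in normalize(beliefs).items():
            tally[object_id] += weight
    consensus = normalize(tally)
    winner = max(consensus, key=consensus.get)
    return winner, consensus


# Three modules (say, three fingertips), each individually unsure.
beliefs = [
    {"cup": 0.5, "bowl": 0.5},
    {"cup": 0.6, "can": 0.4},
    {"cup": 0.4, "bowl": 0.3, "can": 0.3},
]
winner, consensus = vote(beliefs)
print(winner)  # cup
```

No single module in this example is confident, yet "cup" is the only candidate all three support, which is the intuition behind recognizing a cup faster with five fingers than with one.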

After independent internal computation and interaction with other learning modules, a learning module can determine an object's ID and its location. It can update the object's model using recent observations, continually expanding its understanding of the world. The TBP stresses that learning and understanding are two interwoven processes.
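As a rough illustration of determining both an object's ID and the sensor's location on it, the sketch below stores each object model as features at locations in that object's own reference frame, then keeps only the (object, starting location) pairs that explain a sequence of observations. It makes strong simplifying assumptions that are not from the source: locations lie on an integer grid, objects are never rotated, and matching is exact. The function name `consistent_hypotheses` is hypothetical.

```python
def consistent_hypotheses(models, observations):
    """Return (object_id, start_location) pairs that explain every observation.

    models: {object_id: {(x, y): feature}} in each object's reference frame.
    observations: list of ((dx, dy), feature), where (dx, dy) is the sensor's
    displacement from the first sensed point (so the first entry is (0, 0)).
    """
    results = []
    for object_id, model in models.items():
        for start in model:
            if all(
                model.get((start[0] + dx, start[1] + dy)) == feature
                for (dx, dy), feature in observations
            ):
                results.append((object_id, start))
    return results


models = {
    "mug": {(0, 0): "curved", (0, 1): "rim", (1, 0): "handle"},
    "can": {(0, 0): "curved", (0, 1): "flat"},
}

# One touch is ambiguous: both objects have a curved side.
print(consistent_hypotheses(models, [((0, 0), "curved")]))
# Moving up by one and feeling a rim rules out the can.
print(consistent_hypotheses(models, [((0, 0), "curved"), ((0, 1), "rim")]))
```

The second call shows why movement matters: a feature alone is ambiguous, but a feature at a known displacement from the previous one is not.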

▷ Learning modules use reference frames to learn structured models through sensorimotor interactions. They model the relative spatial and temporal arrangement of incoming features.

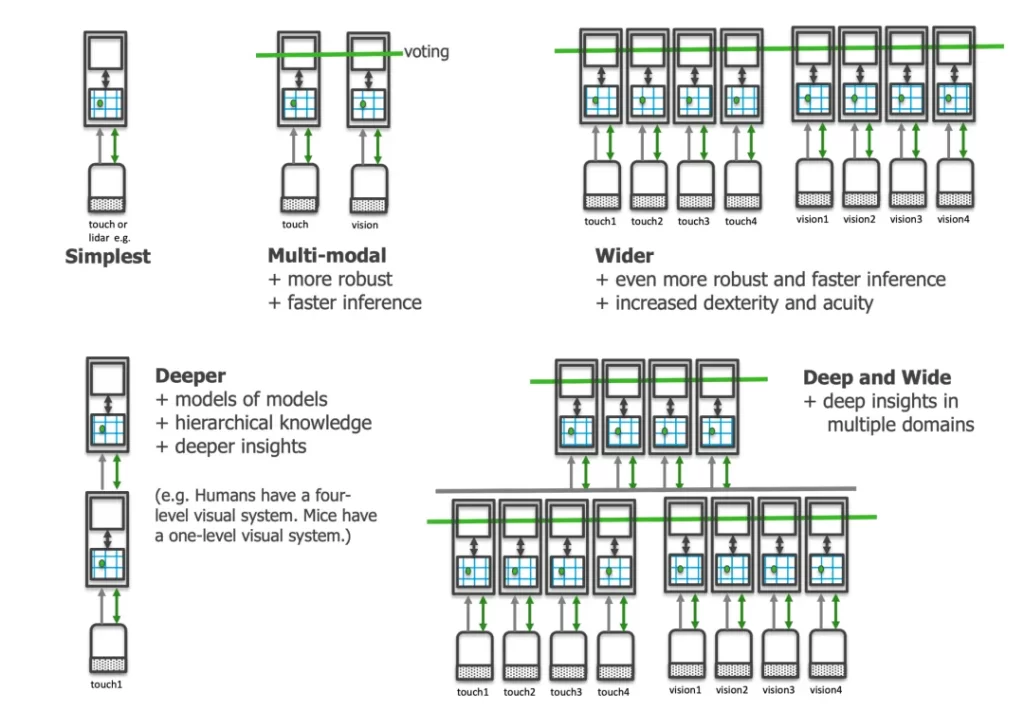

Beyond the simplest "sensor module to learning module" connection, the universal communication protocol lets the system expand easily along several dimensions. Connecting multiple learning modules horizontally can make the system more robust through their interactions. Stacking learning modules vertically enables more complex hierarchical processing of inputs and the modeling of composite objects. In addition to learning across spatial scales in this way, the TBP can learn across different time scales: lower-level modules can slowly learn and generalize the statistical features of their input, while higher-level modules quickly form momentary predictions of the current external state, serving as a form of short-term memory.

Each learning module produces a motor output, which takes the form of a "target state" that follows the universal communication protocol. The target state is calculated from the learned models and the current hypotheses, with the aim of reducing uncertainty about which of the possible object models is correct. In other words, the target state guides the system's behavior toward the desired goal.
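A toy version of choosing a target state that reduces uncertainty is sketched below. It uses a greedy heuristic chosen purely for illustration (move to the location where the remaining candidate models predict the most different features); this is an assumption, not the policy described in the TBP manual, and the names `most_informative_location` and `prune` are hypothetical.

```python
def most_informative_location(models, hypotheses):
    """Pick the location where the remaining hypotheses disagree the most."""
    candidates = set()
    for object_id in hypotheses:
        candidates.update(models[object_id])

    def distinct_predictions(location):
        # A model with nothing stored at this location predicts None.
        return len({models[obj].get(location) for obj in hypotheses})

    # sorted() makes tie-breaking deterministic.
    return max(sorted(candidates), key=distinct_predictions)


def prune(models, hypotheses, location, observed):
    """Keep only the hypotheses whose model predicted what was observed."""
    return [obj for obj in hypotheses if models[obj].get(location) == observed]


models = {
    "mug": {"side": "curved", "top": "rim", "handle": "loop"},
    "can": {"side": "curved", "top": "flat lid"},
    "bowl": {"side": "curved", "top": "wide rim"},
}
hypotheses = ["mug", "can", "bowl"]

target = most_informative_location(models, hypotheses)
print(target)                                     # top
print(prune(models, hypotheses, target, "rim"))   # ['mug']
```

Sensing the side again would tell the system nothing, since all three models predict "curved" there, whereas a single observation at the top separates all three candidates.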

▷ By using a universal communication protocol between the sensor module and the learning module, the system can easily scale across multiple dimensions. This provides a straightforward approach to processing multi-modal sensory inputs. Parallel deployment of multiple learning modules can enhance robustness through a voting mechanism among them. Additionally, stacking learning modules enables more complex hierarchical processing of inputs and facilitates modeling composite objects.

The above description covers the basic functions and connections of the three modules; in practice, they can be connected more flexibly and exhibit richer behaviors, as detailed in the technical manual. One particularly interesting feature is that learning modules can store learned models and use them to predict future observations. When a model's prediction is compared with the current observation, the prediction error can serve as an input for updating the model. This use of feedback signals resembles patterns found in reinforcement learning.
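The idea of using prediction error to update a stored model can be sketched in a few lines. This is a generic error-driven update (a delta rule with a fixed learning rate), used here as an assumption for illustration rather than as the TBP's actual learning rule. The function name `update_model`, the numeric feature values, and the default prediction of 0.0 for unseen locations are all hypothetical.

```python
def update_model(model, location, observed, learning_rate=0.5):
    """Nudge the stored value at a location toward what was just observed.

    Returns the prediction error (observed minus predicted) for inspection.
    """
    predicted = model.get(location, 0.0)   # unseen locations predict 0.0
    error = observed - predicted
    model[location] = predicted + learning_rate * error
    return error


model = {}
first_error = update_model(model, "rim", observed=1.0)
second_error = update_model(model, "rim", observed=1.0)
print(first_error, second_error, model["rim"])  # 1.0 0.5 0.75
```

The error shrinks on each repeated observation, so the model changes a lot when it is surprised and very little once its predictions are accurate.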

However, the manual notes that real-time updates in the TBP predictions are not yet achievable. The future introduction of a time dimension to realize this functionality will greatly benefit object behavior encoding and motion strategy planning. For instance, this functionality could be applied to observing the continuous process of pressing a stapler or roughly simulating the physical properties of common materials.

4. Why the "Thousand Brains Project"?

Returning to the initial question: in an era where both artificial intelligence and brain-machine interfaces lead technological advancement, why has the Gates Foundation decided to provide $2.96 million in funding to the "Thousand Brains Project" over the next two years? Rather than assessing the judgment of the Gates Foundation's advisory team, I would like to conclude this introduction with my personal views on the significance and feasibility of the "Thousand Brains Project."

The "Thousand Brains Project" is a research initiative aimed at developing the next generation of artificial intelligence systems through biomimicry and neuroscience theories. By mimicking the functioning of the cerebral cortex, the project aims to develop intelligent systems capable of perceiving, learning, and acting. This has practical significance in fields such as healthcare and public health. Such technologies could improve quality of life and public health standards, and could help address the challenges and health crises that future societies may face. Supporting their development and application aligns with the expectation of a more intelligent and human-centered society.

Additionally, the "Thousand Brains Project" has far-reaching implications for the future of artificial intelligence and computer technology. Current deep neural networks typically require vast labeled datasets for training and struggle with real-time adaptability to dynamic environments. In contrast, the "Thousand Brains Project" aims to introduce hierarchical and parallel processing capabilities into AI systems through biomimetic methods, enabling more flexible, intelligent, and adaptive machine intelligence. The debate over whether to incorporate principles from the biological brain into artificial intelligence has been ongoing and remains complex. Regardless of the path chosen, the key challenge lies in effective implementation.

In terms of technical execution, the "Thousand Brains Project" aims to create software-based cortical columns that can achieve complex perception and action processes, such as vision and hearing, by connecting multiple units. This cross-modal integration will allow the system to process information from different sensory channels simultaneously, leading to a deeper understanding and interaction with the world.

This concept, while ambitious, is grounded in decades of dedication and persistence by Jeff Hawkins. Shortly after the official launch, Numenta set up a progress page for the "Thousand Brains Project," promising continuous updates on its development. The launch marks the beginning of the Thousand Brains Theory's journey into practical application, not its end point. Much uncertainty remains about the project's future, and its success or failure cannot be predicted. However, it is precisely this uncertainty that makes scientific research so exciting.